KubeSphere official documentation: https://v3-1.docs.kubesphere.io/zh/docs/

Multi-node installation guide: https://v3-1.docs.kubesphere.io/zh/docs/installing-on-linux/introduction/multioverview/

Official air-gapped (offline) installation guide: https://v3-1.docs.kubesphere.io/zh/docs/installing-on-linux/introduction/air-gapped-installation/

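If the environment is adequately protected by external security measures, SELinux can be disabled permanently. The commands below also stop the firewall and turn off swap.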

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

systemctl stop firewalld && systemctl disable firewalld

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab
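The `sed` command for /etc/fstab prefixes every line that contains `swap`, so a second run adds another `#`, and comment lines that merely mention swap are touched as well. The following is a minimal sketch of a re-runnable variant. The function name `comment_out_swap` and the sample entries are illustrative assumptions, and the demonstration edits a temporary file rather than the real /etc/fstab.

```shell
#!/usr/bin/env bash
# Sketch (assumption, not part of the original procedure): comment out
# active swap entries in an fstab-style file without touching lines that
# are already comments, so the command is safe to run repeatedly.
comment_out_swap() {
    local fstab_file="$1"
    # Only lines that do not start with '#' and that contain "swap" as a
    # whitespace-delimited field are prefixed with '#'.
    sed -i -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab_file"
}

# Demonstration on a temporary file with made-up entries.
demo_fstab="$(mktemp)"
printf '%s\n' \
    '/dev/mapper/centos-root /    xfs  defaults 0 0' \
    '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo_fstab"

comment_out_swap "$demo_fstab"
comment_out_swap "$demo_fstab"   # the second run changes nothing
cat "$demo_fstab"
```

Once the result looks right on a copy, the same function could be pointed at /etc/fstab (as root), ideally after taking a backup.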

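Detailed Docker online-installation tutorial: installation

Note: the steps below describe an offline installation on a government intranet.

Choose a suitable Docker version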

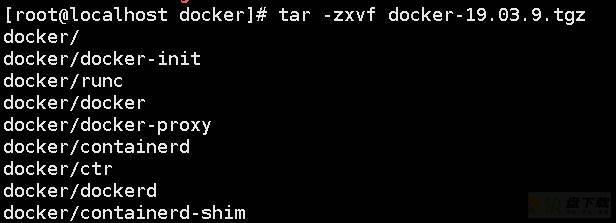

tar -zxvf docker-19.03.9.tgz

Copy everything in the extracted docker folder to /usr/bin

cp docker/* /usr/bin/

Contents of /etc/systemd/system/docker.service:

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/bin/dockerd

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

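#Run the following commands in order

#Create the unit file with the docker.service content shown above

vi /etc/systemd/system/docker.service

#Unit files do not need to be executable; systemd logs a warning when they are

chmod 644 /etc/systemd/system/docker.service

systemctl daemon-reload && systemctl start docker && systemctl enable docker.service

docker -v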

Run vi /etc/docker/daemon.json and enter the following content:

{

"registry-mirrors": [

"https://sq9p56f6.mirror.aliyuncs.com"

],

"insecure-registries": ["IP:8088"],

"exec-opts":["native.cgroupdriver=systemd"]

}

Docker Compose download URL: https://github.com/docker/compose/releases/download/1.24.1/docker-compose-Linux-x86_64

Choose a suitable Docker Compose version

#Run the following commands in order

#Rename the binary

mv docker-compose-Linux-x86_64 docker-compose

#Make it executable

chmod +x docker-compose

#Move docker-compose to /usr/local/bin

mv docker-compose /usr/local/bin

#Open /etc/profile

vi /etc/profile

#Append the following line to the end of the file, then save and exit

export PATH=$JAVA_HOME:/usr/local/bin:$PATH

#Reload the profile so that the change takes effect

source /etc/profile

#Test

docker-compose

Detailed Harbor tutorial: installation

This assumes you already have the Harbor offline installer package.

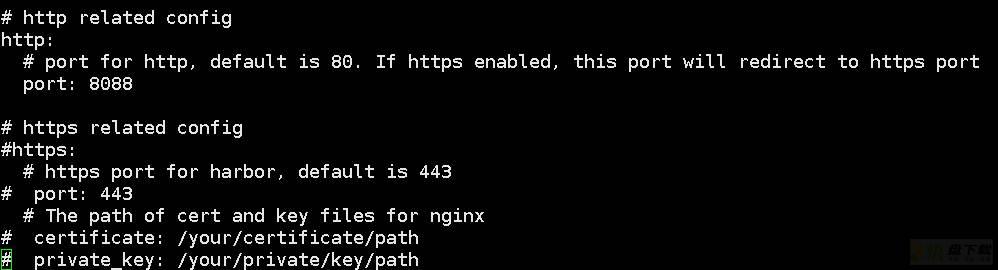

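#Extract the package and enter the extracted directory

tar -zxvf harbor-offline-installer-v2.5.0-rc4.tgz

cd harbor

cp harbor.yml.tmpl harbor.yml

#Comment out the https section and set the http parameters, mainly hostname and port; everything else can stay unchanged.

vi harbor.yml

#Start

./prepare

./install.sh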
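The manual edit of harbor.yml can also be scripted. The sketch below is one possible way to do it, not the installer's own mechanism: the function name `configure_harbor_http` is hypothetical, and the sample file is an abbreviated stand-in that assumes the template's usual top-level `hostname:`, `http:` and `https:` keys. The address 192.168.239.24 and port 8088 are the registry values used in config-sample.yaml in this article.

```shell
#!/usr/bin/env bash
# Sketch (assumption): set hostname and the http port, and comment out the
# https section of a harbor.yml, without opening an editor.
configure_harbor_http() {
    local config_file="$1" host="$2" port="$3"
    sed -i -E \
        -e "s/^hostname:.*/hostname: ${host}/" \
        -e "/^http:/,/^[[:space:]]*port:/s/^([[:space:]]*port:).*/\1 ${port}/" \
        -e "/^https:/,/^[[:space:]]*private_key:/s/^/#/" \
        "$config_file"
}

# Demonstration on an abbreviated stand-in for harbor.yml.
demo_config="$(mktemp)"
cat > "$demo_config" <<'EOF'
hostname: reg.mydomain.com
http:
  port: 80
https:
  port: 443
  certificate: /your/certificate/path
  private_key: /your/private/key/path
harbor_admin_password: Harbor12345
EOF

configure_harbor_http "$demo_config" 192.168.239.24 8088
cat "$demo_config"
```

Because the https lines no longer start with `https:` after the first run, running the function again leaves them as they are. Check the result against the real harbor.yml before running ./prepare, since the actual template contains more keys than this sample.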

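You can change the Kubernetes version to download as needed. The recommended Kubernetes versions for KubeSphere v3.1.1 are v1.17.9, v1.18.8, v1.19.8, and v1.20.4. If no Kubernetes version is specified, KubeKey installs Kubernetes v1.19.8 by default. For more information about supported Kubernetes versions, see the support matrix.

After the script runs, a folder named kubekey is created automatically. Note that this folder and kk must be in the same directory when you create the cluster later.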

#Skip the downloads if you already have these files

#Download the image list:

curl -L -O https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/images-list.txt

#Download offline-installation-tool.sh

curl -L -O https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/offline-installation-tool.sh

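#Make the script executable.

chmod +x offline-installation-tool.sh

#IP:8088 is the IP address and port of the private registry

./offline-installation-tool.sh -l images-list.txt -d ./kubesphere-images -r IP:8088/library

#Extract the KubeKey package and enter its directory:

chmod +x kk

#Specify the versions; skip this step if the kk package already contains a yaml file

./kk create config --with-kubernetes v1.20.4 --with-kubesphere v3.1.1 -f config-sample.yaml

#Edit the file; the full yaml is listed further below

vim config-sample.yaml

#Machines to include in the cluster: spec.hosts

#Master nodes: spec.roleGroups.etcd and spec.roleGroups.master

#Worker nodes: spec.roleGroups.worker

#Private registry address: registry.insecureRegistries

#Run the installation

./kk create cluster -f config-sample.yaml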

config-sample.yaml

apiVersion: kubekey.kubesphere.io/v1alpha1

kind: Cluster

metadata:

  name: sample

spec:

  hosts:

  - {name: master14, address: 192.168.239.14, internalAddress: 192.168.239.14, port: 22, user: root, password: 123456}

  - {name: node22, address: 192.168.239.22, internalAddress: 192.168.239.22, port: 22, user: root, password: 123456}

  - {name: node23, address: 192.168.239.23, internalAddress: 192.168.239.23, port: 22, user: root, password: 123456}

  roleGroups:

    etcd:

    - master14

    master:

    - master14

    worker:

    - node22

    - node23

  controlPlaneEndpoint:

    domain: lb.kubesphere.local

    address: ""

    port: 6443

  kubernetes:

    version: v1.19.8

    imageRepo: kubesphere

    clusterName: cluster.local

  network:

    plugin: calico

    kubePodsCIDR: 10.233.64.0/18

    kubeServiceCIDR: 10.233.0.0/18

  registry:

    registryMirrors: []

    insecureRegistries: ["192.168.239.24:8088"]

    privateRegistry: 192.168.239.24:8088/library

  addons: []

---

apiVersion: installer.kubesphere.io/v1alpha1

kind: ClusterConfiguration

metadata:

  name: ks-installer

  namespace: kubesphere-system

  labels:

    version: v3.1.1

spec:

  persistence:

    storageClass: ""

  authentication:

    jwtSecret: ""

  zone: ""

  local_registry: ""

  etcd:

    monitoring: false

    endpointIps: localhost

    port: 2379

    tlsEnable: true

  common:

    redis:

      enabled: false

    redisVolumSize: 2Gi

    openldap:

      enabled: false

    openldapVolumeSize: 2Gi

    minioVolumeSize: 20Gi

    monitoring:

      endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090

    es:

      elasticsearchMasterVolumeSize: 4Gi

      elasticsearchDataVolumeSize: 20Gi

      logMaxAge: 7

      elkPrefix: logstash

      basicAuth:

        enabled: false

        username: ""

        password: ""

      externalElasticsearchUrl: ""

      externalElasticsearchPort: ""

  console:

    enableMultiLogin: true

    port: 30880

  alerting:

    enabled: false

    # thanosruler:

    #   replicas: 1

    #   resources: {}

  auditing:

    enabled: false

  devops:

    enabled: true

    jenkinsMemoryLim: 2Gi

    jenkinsMemoryReq: 1500Mi

    jenkinsVolumeSize: 8Gi

    jenkinsJavaOpts_Xms: 512m

    jenkinsJavaOpts_Xmx: 512m

    jenkinsJavaOpts_MaxRAM: 2g

  events:

    enabled: false

    ruler:

      enabled: true

      replicas: 2

  logging:

    enabled: false

    logsidecar:

      enabled: true

      replicas: 2

  metrics_server:

    enabled: false

  monitoring:

    storageClass: ""

    prometheusMemoryRequest: 400Mi

    prometheusVolumeSize: 20Gi

  multicluster:

    clusterRole: none

  network:

    networkpolicy:

      enabled: false

    ippool:

      type: none

    topology:

      type: none

  openpitrix:

    store:

      enabled: false

  servicemesh:

    enabled: false

  kubeedge:

    enabled: false

    cloudCore:

      nodeSelector: {"node-role.kubernetes.io/worker": ""}

      tolerations: []

      cloudhubPort: "10000"

      cloudhubQuicPort: "10001"

      cloudhubHttpsPort: "10002"

      cloudstreamPort: "10003"

      tunnelPort: "10004"

      cloudHub:

        advertiseAddress:

          - ""

        nodeLimit: "100"

      service:

        cloudhubNodePort: "30000"

        cloudhubQuicNodePort: "30001"

        cloudhubHttpsNodePort: "30002"

        cloudstreamNodePort: "30003"

        tunnelNodePort: "30004"

    edgeWatcher:

      nodeSelector: {"node-role.kubernetes.io/worker": ""}

      tolerations: []

      edgeWatcherAgent:

        nodeSelector: {"node-role.kubernetes.io/worker": ""}

        tolerations: []

For environments with Internet access

#Pick a suitable working directory

export KKZONE=cn; curl -sfL https://get-kk.kubesphere.io | VERSION=v1.1.1 sh -

chmod +x kk

./kk create config --with-kubernetes v1.20.4 --with-kubesphere v3.1.1 -f config-sample.yaml

#Edit the yaml

vim config-sample.yaml

#Private registry address: registry.insecureRegistries

#Machines to include in the cluster: spec.hosts

#Master nodes: spec.roleGroups.etcd and spec.roleGroups.master

#Worker nodes: spec.roleGroups.worker

#Run the installation

./kk create cluster -f config-sample.yaml

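Install Docker yourself. If Docker is not installed, kk installs it automatically, but it is better to install Docker yourself and apply the required configuration beforehand.

If the machine's disk is not large enough, mount external storage. Docker's default data directory is /var/lib/docker, and in most cases the local disk is too small for it, so Docker's container storage has to be placed on external storage.

Set up the private image registry first. All later image pulls depend on the private registry. If it is not configured now and you discover after the Kubernetes installation that images cannot be pulled, changing daemon.json at that point requires restarting Docker, which is fairly risky.

Some plugins can be enabled before installation, but it is better to enable them afterwards so that the Kubernetes installation does not exceed its timeout. Put simply, in config-sample.yaml change nothing except the private registry and the machine settings.

When the installation finishes, the console output shows the access address. Log in as prompted; the account and password are printed there as well. The installation takes about 20 minutes.

Shutting down a Kubernetes cluster is fairly risky, so this approach is not recommended at customer sites with a risk of power loss. A cluster that fails to start after a power loss is unlikely, but when it happens the cluster has to be uninstalled and reinstalled. That takes a single command, yet it leaves a service gap of several tens of minutes. If you must deploy this way, make sure a standby deployment plan is available to switch to at any time.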
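Whether the disk behind /var/lib/docker is large enough can be checked before installing. The sketch below is only an illustration: the function name `has_free_space` and the 100 GB threshold are assumptions rather than values from this article, so adjust the threshold to the size of your images.

```shell
#!/usr/bin/env bash
# Sketch (assumption): report whether the filesystem that holds a directory
# has at least the given number of gigabytes available.
has_free_space() {
    local dir="$1" min_gb="$2" avail_kb
    # df -P prints one POSIX-format line per filesystem; with -k, field 4
    # is the available space in 1024-byte blocks.
    avail_kb="$(df -Pk "$dir" | awk 'NR == 2 { print $4 }')"
    [ "$avail_kb" -ge $((min_gb * 1024 * 1024)) ]
}

# /var/lib/docker may not exist before Docker is installed, so fall back
# to a parent directory on the same filesystem.
docker_dir=/var/lib/docker
[ -d "$docker_dir" ] || docker_dir=/var/lib
[ -d "$docker_dir" ] || docker_dir=/

# 100 GB is an arbitrary example threshold.
if has_free_space "$docker_dir" 100; then
    echo "enough space under $docker_dir"
else
    echo "less than 100 GB free under $docker_dir; consider external storage"
fi
```

If the space lives on a separately mounted volume, Docker's `data-root` setting in daemon.json is one way to point the data directory at it. Set it before the cluster is installed, because changing it later requires restarting Docker.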

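Steps:

Enable the logging component and the pluggable components

Create a workspace

Create a project

Configure the image registry

Install the required environment (nacos, xxljob, redis, mysql)

Create the self-made application from the services

Access the application -> OK

Installing MySQL outside the cluster is recommended.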

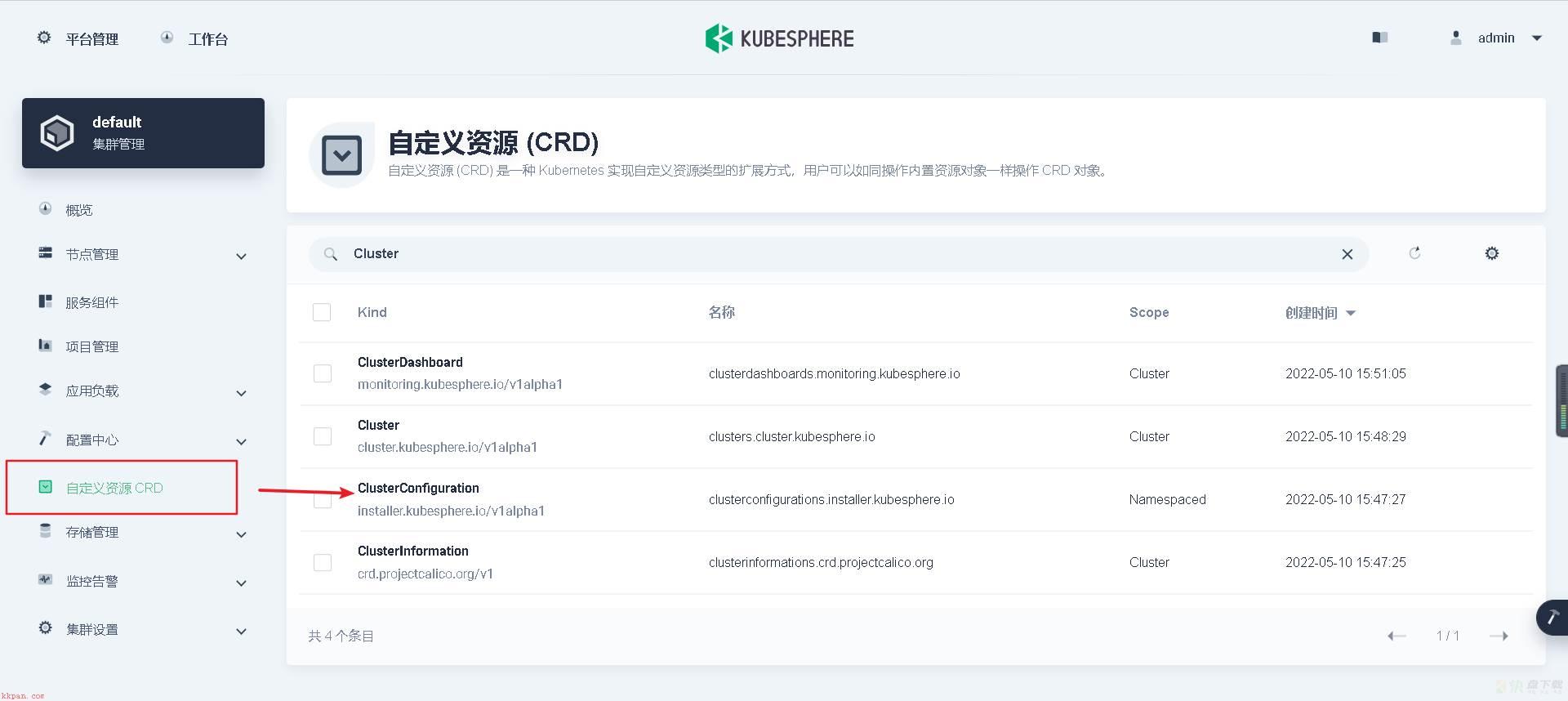

Enable the logging component and the pluggable components

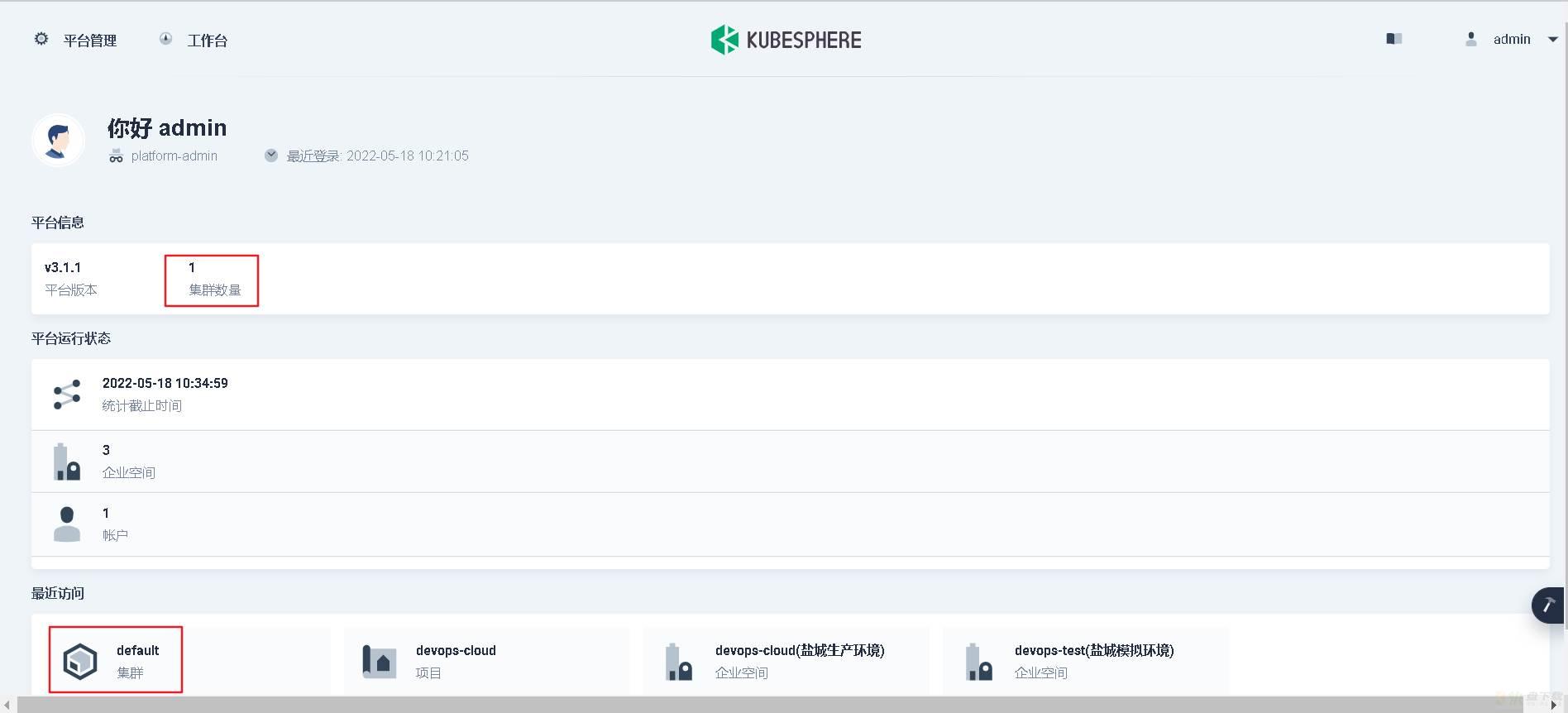

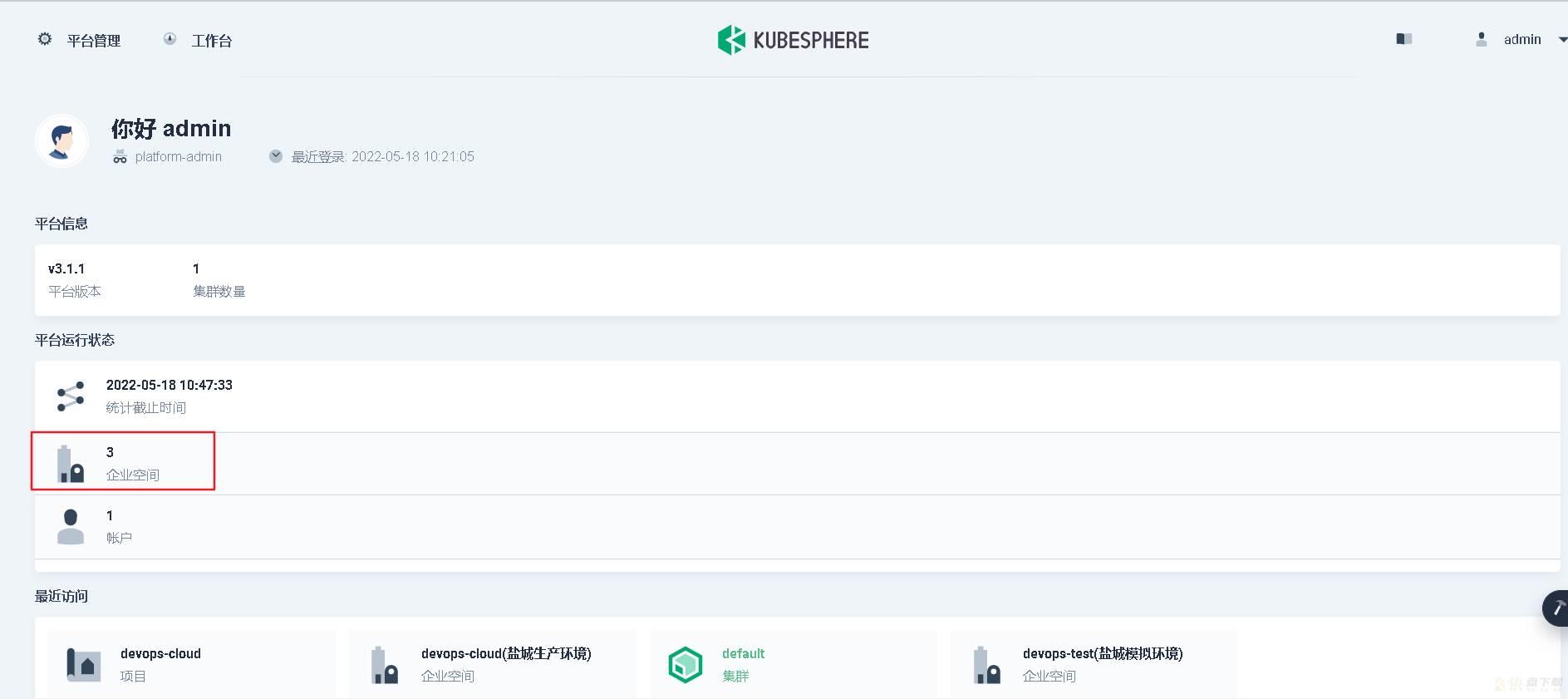

Go to the management page of the default cluster

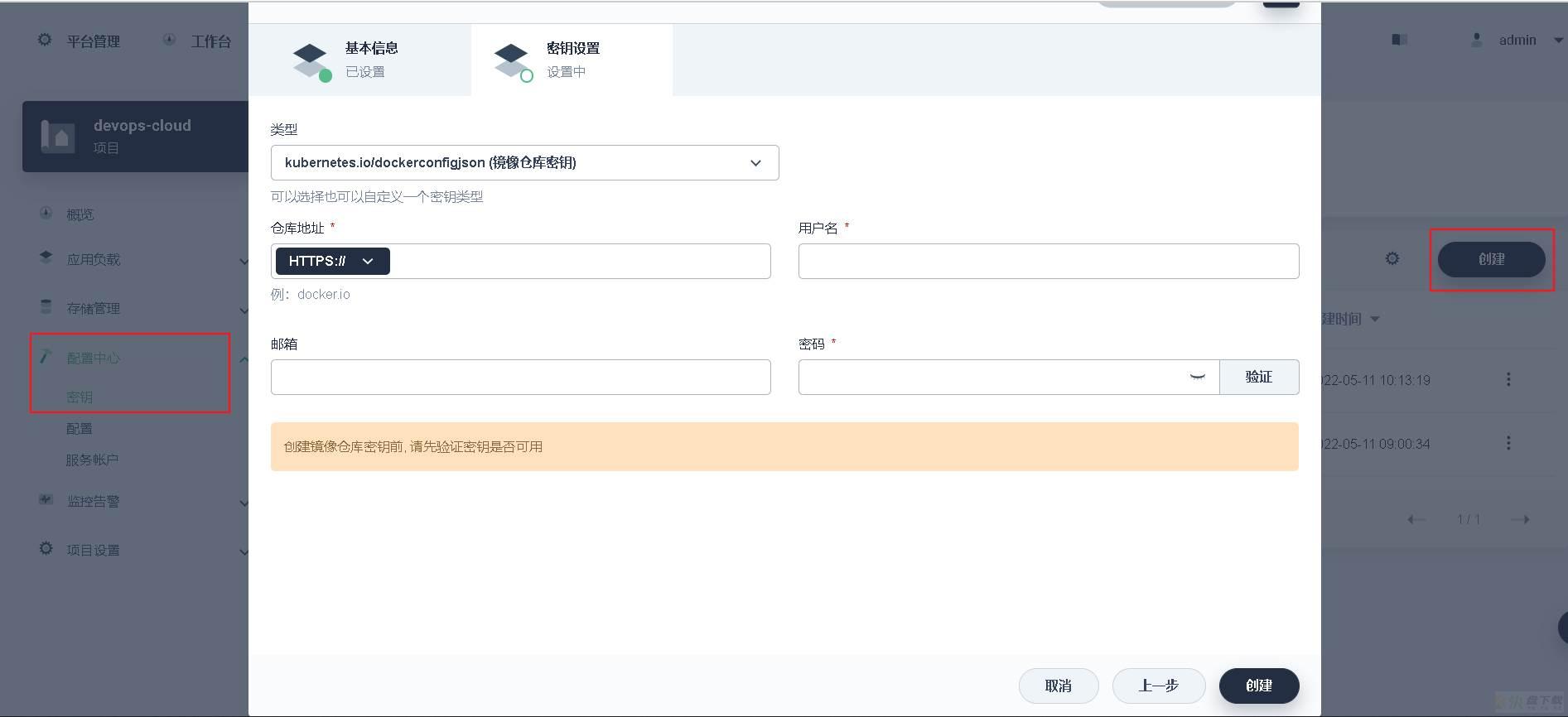

Configure the image registry

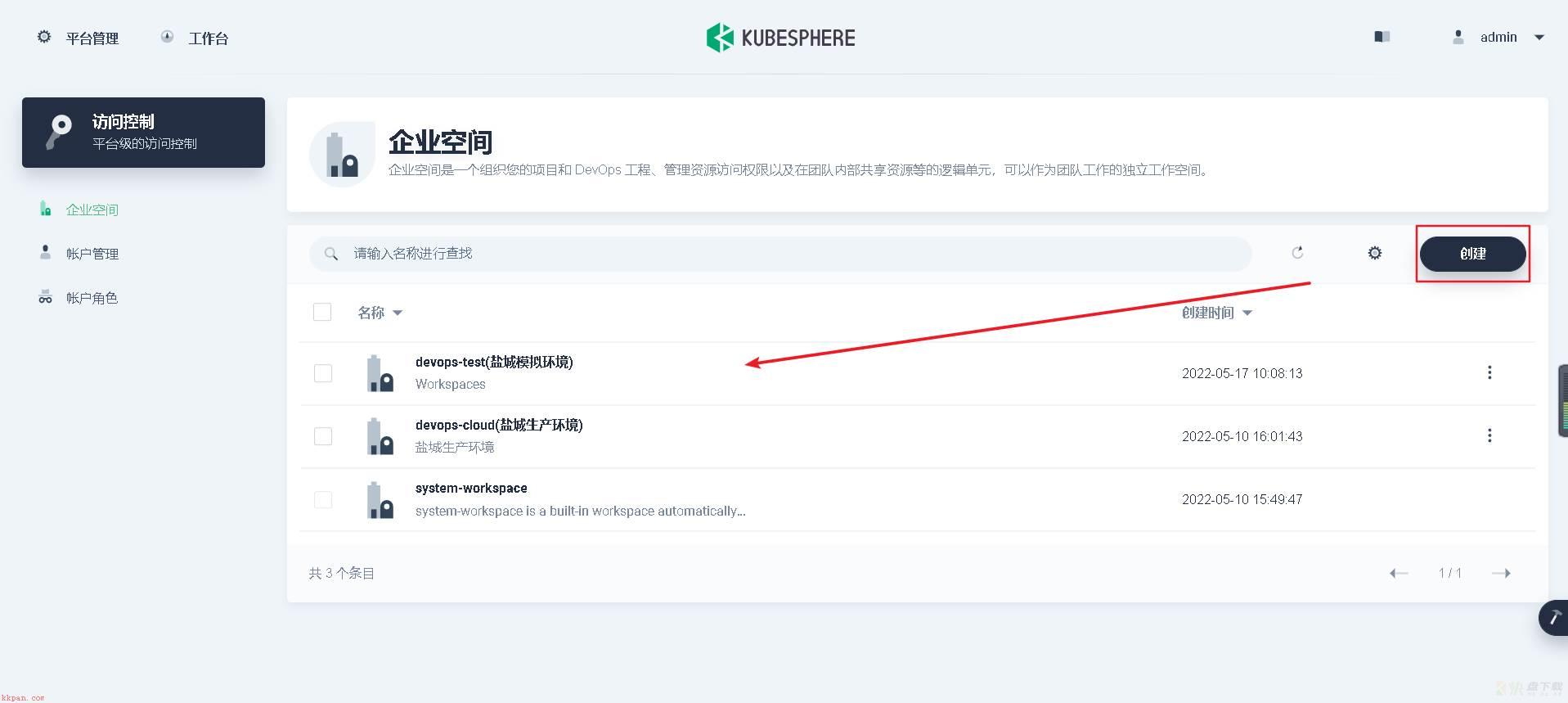

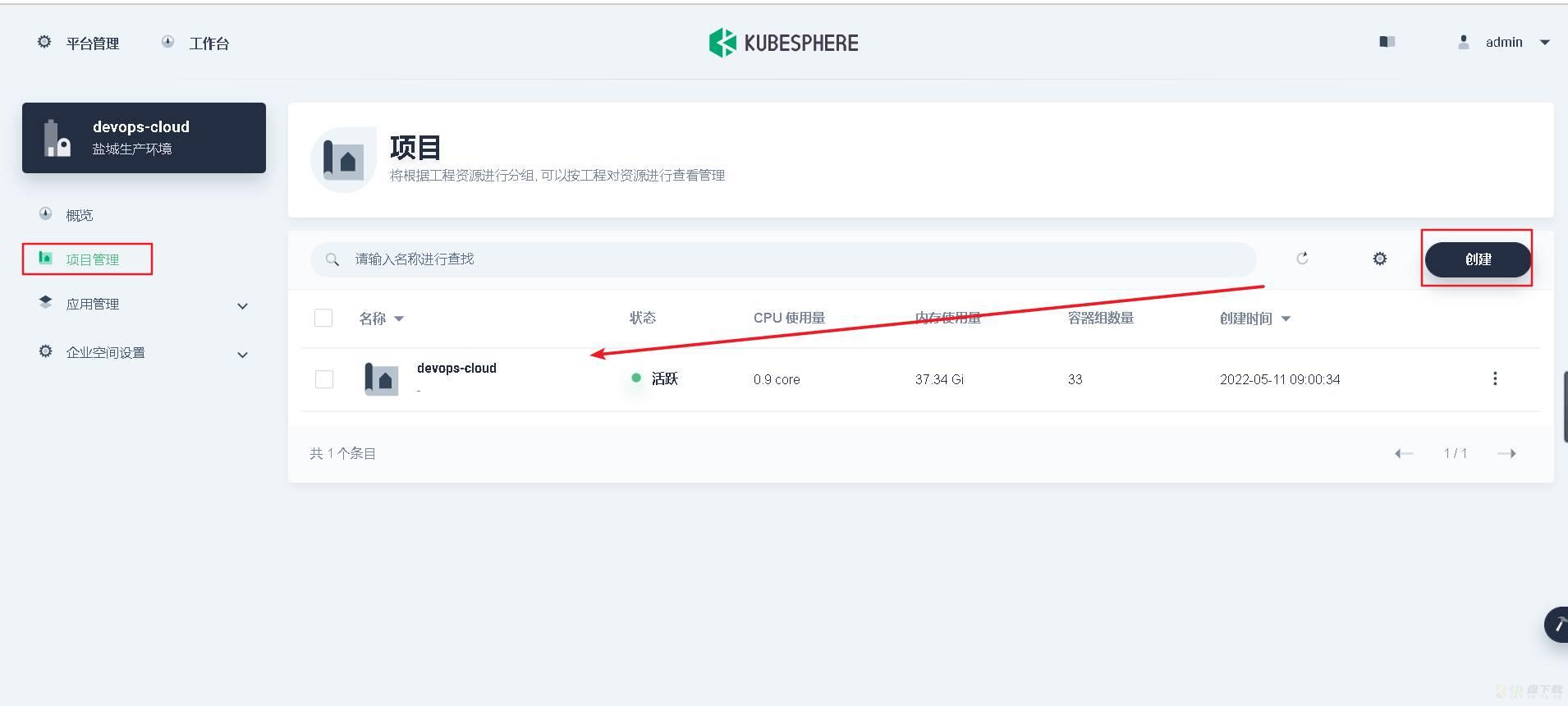

Go to workspace management

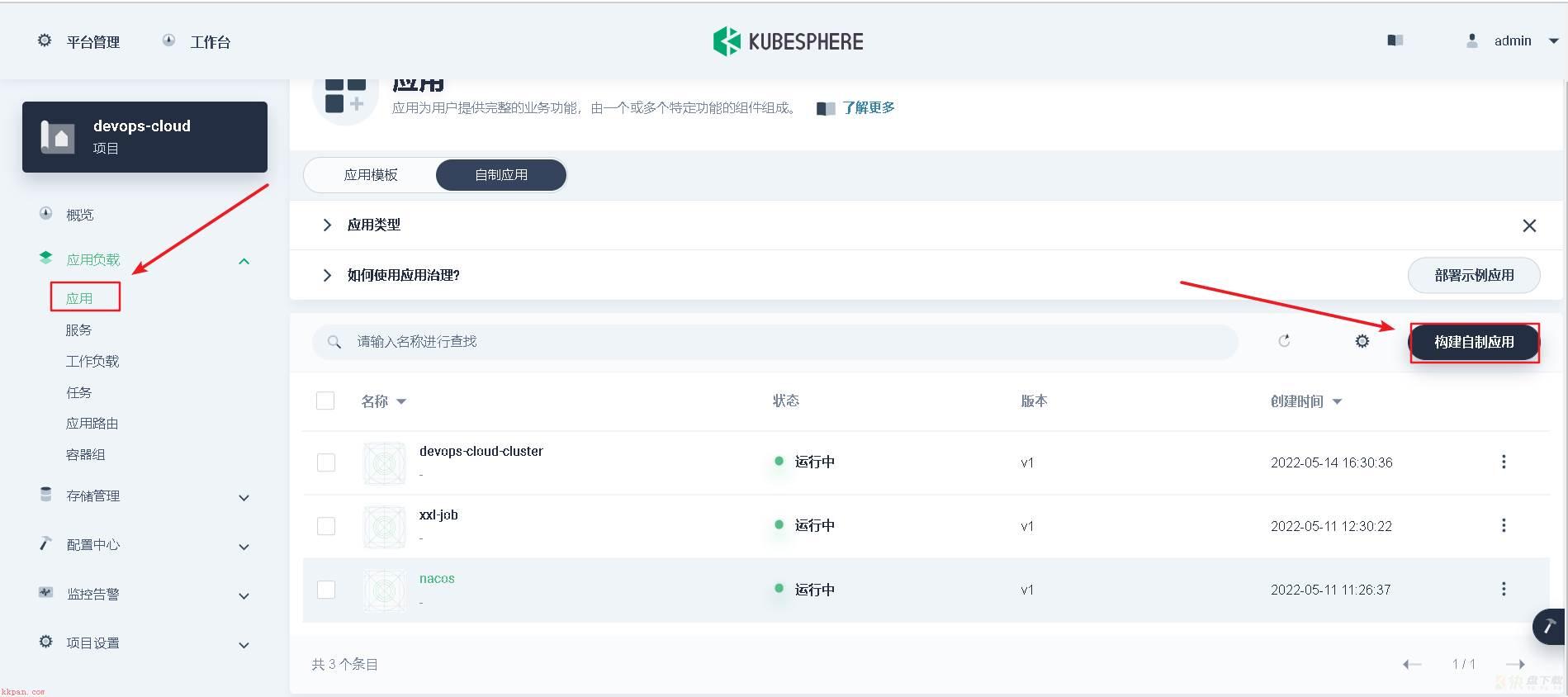

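This article builds the self-made applications from yml. For a different project, only the workspace, the image registry, and the database settings need to change. Note: the steps here assume that the database already exists and that the project images have already been pushed to the image registry.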
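Adapting the manifests to another project can be done with a text substitution instead of editing each file by hand. The sketch below assumes the manifests keep `IP:8088` as the registry placeholder and `devops-cloud` as the namespace, as in the listings in this article. The function name `render_manifest` and the target values are hypothetical, and the database settings are left for manual editing.

```shell
#!/usr/bin/env bash
# Sketch (assumption): print a copy of a manifest with the registry
# placeholder and the namespace replaced. The input file is not modified.
render_manifest() {
    local manifest="$1" registry="$2" namespace="$3"
    sed -E \
        -e "s#(image: ')IP:8088/#\1${registry}/#" \
        -e "s#^([[:space:]]*namespace:)[[:space:]]*devops-cloud[[:space:]]*\$#\1 ${namespace}#" \
        "$manifest"
}

# Demonstration on a small excerpt in the style of the nacos manifest.
demo_manifest="$(mktemp)"
cat > "$demo_manifest" <<'EOF'
metadata:
  name: nacos-v1
  namespace: devops-cloud
spec:
  containers:
    - image: 'IP:8088/common/nacos-server:2.0.2'
EOF

render_manifest "$demo_manifest" 192.168.239.24:8088 my-project
```

Only lines of the exact form `namespace: devops-cloud` are rewritten, so names that merely contain the string, such as `devops-cloud-gateway`, stay untouched. Redirect the output to a new file and review it before applying it.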

(1)nacos

apiVersion: app.k8s.io/v1beta1

kind: Application

metadata:

name: nacos

namespace: devops-cloud

labels:

app.kubernetes.io/version: v1

app.kubernetes.io/name: nacos

annotations:

servicemesh.kubesphere.io/enabled: 'false'

spec:

selector:

matchLabels:

app.kubernetes.io/version: v1

app.kubernetes.io/name: nacos

addOwnerRef: true

componentKinds:

- group: ''

kind: Service

- group: apps

kind: Deployment

- group: apps

kind: StatefulSet

- group: extensions

kind: Ingress

- group: servicemesh.kubesphere.io

kind: Strategy

- group: servicemesh.kubesphere.io

kind: ServicePolicy

---

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

namespace: devops-cloud

labels:

app.kubernetes.io/version: v1

app.kubernetes.io/name: nacos

name: nacos-ingress-i52okm

spec:

rules: []

---

apiVersion: apps/v1

kind: Deployment

metadata:

namespace: devops-cloud

labels:

version: v1

app: nacos

app.kubernetes.io/version: v1

app.kubernetes.io/name: nacos

name: nacos-v1

annotations:

servicemesh.kubesphere.io/enabled: 'false'

spec:

replicas: 1

selector:

matchLabels:

version: v1

app: nacos

app.kubernetes.io/version: v1

app.kubernetes.io/name: nacos

template:

metadata:

labels:

version: v1

app: nacos

app.kubernetes.io/version: v1

app.kubernetes.io/name: nacos

annotations:

logging.kubesphere.io/logsidecar-config: '{}'

sidecar.istio.io/inject: 'false'

spec:

containers:

- name: container-iy6dn0

imagePullPolicy: Always

pullSecret: harbor

image: 'IP:8088/common/nacos-server:2.0.2'

ports:

- name: tcp-8848

protocol: TCP

containerPort: 8848

servicePort: 8848

- name: tcp-9848

protocol: TCP

containerPort: 9848

servicePort: 9848

env:

- name: MYSQL_SERVICE_DB_NAME

value: nacos

- name: MYSQL_SERVICE_DB_PARAM

value: >-

useUnicode=true&characterEncoding=UTF-8&autoReconnect=true&useSSL=false&zeroDateTimeBehavior=convertToNull&serverTimezone=Asia/Shanghai

- name: MYSQL_SERVICE_HOST

value: IP

- name: MYSQL_SERVICE_PASSWORD

value: <database-password>

- name: MYSQL_SERVICE_PORT

value: '3306'

- name: MYSQL_SERVICE_USER

value: root

- name: SPRING_DATASOURCE_PLATFORM

value: mysql

volumeMounts:

- name: host-time

mountPath: /etc/localtime

readOnly: true

- name: nacos

readOnly: false

mountPath: /home/nacos/data

serviceAccount: default

affinity: {}

initContainers: []

volumes:

- hostPath:

path: /etc/localtime

type: ''

name: host-time

- name: nacos

emptyDir: {}

imagePullSecrets:

- name: harbor

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 25%

maxSurge: 25%

---

apiVersion: v1

kind: Service

metadata:

namespace: devops-cloud

labels:

version: v1

app: nacos

app.kubernetes.io/version: v1

app.kubernetes.io/name: nacos

annotations:

kubesphere.io/serviceType: statelessservice

servicemesh.kubesphere.io/enabled: 'false'

name: nacos

spec:

sessionAffinity: None

selector:

app: nacos

app.kubernetes.io/version: v1

app.kubernetes.io/name: nacos

template:

metadata:

labels:

version: v1

app: nacos

app.kubernetes.io/version: v1

app.kubernetes.io/name: nacos

ports:

- name: tcp-8848

protocol: TCP

port: 8848

targetPort: 8848

- name: tcp-9848

protocol: TCP

port: 9848

targetPort: 9848

type: NodePort

(2)xxl-job

apiVersion: app.k8s.io/v1beta1

kind: Application

metadata:

name: xxl-job

namespace: devops-cloud

labels:

app.kubernetes.io/version: v1

app.kubernetes.io/name: xxl-job

annotations:

servicemesh.kubesphere.io/enabled: 'false'

spec:

selector:

matchLabels:

app.kubernetes.io/version: v1

app.kubernetes.io/name: xxl-job

addOwnerRef: true

componentKinds:

- group: ''

kind: Service

- group: apps

kind: Deployment

- group: apps

kind: StatefulSet

- group: extensions

kind: Ingress

- group: servicemesh.kubesphere.io

kind: Strategy

- group: servicemesh.kubesphere.io

kind: ServicePolicy

---

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

namespace: devops-cloud

labels:

app.kubernetes.io/version: v1

app.kubernetes.io/name: xxl-job

name: xxl-job-ingress-j6ldh4

spec:

rules: []

---

apiVersion: apps/v1

kind: Deployment

metadata:

namespace: devops-cloud

labels:

version: v1

app: xxl-job

app.kubernetes.io/version: v1

app.kubernetes.io/name: xxl-job

name: xxl-job-v1

annotations:

servicemesh.kubesphere.io/enabled: 'false'

spec:

replicas: 1

selector:

matchLabels:

version: v1

app: xxl-job

app.kubernetes.io/version: v1

app.kubernetes.io/name: xxl-job

template:

metadata:

labels:

version: v1

app: xxl-job

app.kubernetes.io/version: v1

app.kubernetes.io/name: xxl-job

annotations:

logging.kubesphere.io/logsidecar-config: '{}'

sidecar.istio.io/inject: 'false'

spec:

containers:

- name: container-7qspzi

imagePullPolicy: IfNotPresent

pullSecret: harbor

image: 'IP:8088/common/xxl-job-admin'

ports:

- name: http-8094

protocol: TCP

containerPort: 8094

servicePort: 8094

env:

- name: MYSQL_SERVICE_DB_NAME

value: xxl-job

- name: MYSQL_SERVICE_HOST

value: IP

- name: MYSQL_SERVICE_PORT

value: '3306'

- name: MYSQL_SERVICE_PASSWORD

value: <database-password>

- name: MYSQL_SERVICE_USER

value: root

- name: SPRING_MAIL_HOST

value: imap.163.com

- name: SPRING_MAIL_PORT

value: '143'

- name: SPRING_MAIL_USERNAME

value: xk_admin@163.com

- name: SPRING_MAIL_FROM

value: xk_admin@163.com

- name: SPRING_MAIL_PASSWORD

value: <mail-authorization-code>

volumeMounts:

- name: app

readOnly: false

mountPath: /app

serviceAccount: default

affinity: {}

initContainers: []

volumes:

- name: app

emptyDir: {}

imagePullSecrets:

- name: harbor

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 25%

maxSurge: 25%

---

apiVersion: v1

kind: Service

metadata:

namespace: devops-cloud

labels:

version: v1

app: xxl-job

app.kubernetes.io/version: v1

app.kubernetes.io/name: xxl-job

annotations:

kubesphere.io/serviceType: statelessservice

servicemesh.kubesphere.io/enabled: 'false'

name: xxl-job

spec:

sessionAffinity: None

selector:

app: xxl-job

app.kubernetes.io/version: v1

app.kubernetes.io/name: xxl-job

template:

metadata:

labels:

version: v1

app: xxl-job

app.kubernetes.io/version: v1

app.kubernetes.io/name: xxl-job

ports:

- name: http-8094

protocol: TCP

port: 8094

targetPort: 8094

type: NodePort

(3)devops-cloud-cluster

apiVersion: app.k8s.io/v1beta1

kind: Application

metadata:

name: devops-cloud-cluster

namespace: devops-cloud

labels:

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

servicemesh.kubesphere.io/enabled: 'false'

spec:

selector:

matchLabels:

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

addOwnerRef: true

componentKinds:

- group: ''

kind: Service

- group: apps

kind: Deployment

- group: apps

kind: StatefulSet

- group: extensions

kind: Ingress

- group: servicemesh.kubesphere.io

kind: Strategy

- group: servicemesh.kubesphere.io

kind: ServicePolicy

---

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

namespace: devops-cloud

labels:

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

name: devops-cloud-cluster-ingress-dydnej

spec:

rules: []

---

apiVersion: apps/v1

kind: Deployment

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-cloud-gateway

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

name: devops-cloud-gateway-v1

annotations:

servicemesh.kubesphere.io/enabled: 'false'

spec:

replicas: 4

selector:

matchLabels:

version: v1

app: devops-cloud-gateway

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-cloud-gateway

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

logging.kubesphere.io/logsidecar-config: '{}'

sidecar.istio.io/inject: 'false'

spec:

containers:

- name: container-n68t1b

imagePullPolicy: Always

pullSecret: harbor

image: 'IP:8088/prod/devops-cloud-gateway:latest'

ports:

- name: tcp-10001

protocol: TCP

containerPort: 10001

servicePort: 10001

volumeMounts:

- name: host-time

mountPath: /etc/localtime

readOnly: true

- name: app

readOnly: false

mountPath: /app

serviceAccount: default

affinity: {}

initContainers: []

volumes:

- hostPath:

path: /etc/localtime

type: ''

name: host-time

- name: app

emptyDir: {}

imagePullSecrets:

- name: harbor

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 25%

maxSurge: 25%

---

apiVersion: v1

kind: Service

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-cloud-gateway

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

kubesphere.io/serviceType: statelessservice

servicemesh.kubesphere.io/enabled: 'false'

name: devops-cloud-gateway

spec:

sessionAffinity: None

selector:

app: devops-cloud-gateway

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-cloud-gateway

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

ports:

- name: tcp-10001

protocol: TCP

port: 10001

targetPort: 10001

type: NodePort

---

apiVersion: apps/v1

kind: Deployment

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-system

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

name: devops-system-v1

annotations:

servicemesh.kubesphere.io/enabled: 'false'

spec:

replicas: 1

selector:

matchLabels:

version: v1

app: devops-system

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-system

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

logging.kubesphere.io/logsidecar-config: '{}'

sidecar.istio.io/inject: 'false'

spec:

containers:

- name: container-bejgi2

imagePullPolicy: Always

pullSecret: harbor

image: 'IP:8088/prod/devops-system'

ports:

- name: tcp-10003

protocol: TCP

containerPort: 10003

servicePort: 10003

volumeMounts:

- name: host-time

mountPath: /etc/localtime

readOnly: true

- name: app

readOnly: false

mountPath: /app

serviceAccount: default

affinity: {}

initContainers: []

volumes:

- hostPath:

path: /etc/localtime

type: ''

name: host-time

- name: app

emptyDir: {}

imagePullSecrets:

- name: harbor

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 25%

maxSurge: 25%

---

apiVersion: v1

kind: Service

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-system

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

kubesphere.io/serviceType: statelessservice

servicemesh.kubesphere.io/enabled: 'false'

name: devops-system

spec:

sessionAffinity: None

selector:

app: devops-system

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-system

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

ports:

- name: tcp-10003

protocol: TCP

port: 10003

targetPort: 10003

---

apiVersion: apps/v1

kind: Deployment

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-trade-aggregate

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

name: devops-trade-aggregate-v1

annotations:

servicemesh.kubesphere.io/enabled: 'false'

spec:

replicas: 4

selector:

matchLabels:

version: v1

app: devops-trade-aggregate

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-trade-aggregate

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

logging.kubesphere.io/logsidecar-config: '{}'

sidecar.istio.io/inject: 'false'

spec:

containers:

- name: container-v6eeyv

imagePullPolicy: Always

pullSecret: harbor

image: 'IP:8088/prod/devops-trade-aggregate'

ports:

- name: tcp-10005

protocol: TCP

containerPort: 10005

servicePort: 10005

volumeMounts:

- name: host-time

mountPath: /etc/localtime

readOnly: true

- name: app

readOnly: false

mountPath: /app

serviceAccount: default

affinity: {}

initContainers: []

volumes:

- hostPath:

path: /etc/localtime

type: ''

name: host-time

- name: app

emptyDir: {}

imagePullSecrets:

- name: harbor

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 25%

maxSurge: 25%

---

apiVersion: v1

kind: Service

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-trade-aggregate

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

kubesphere.io/serviceType: statelessservice

servicemesh.kubesphere.io/enabled: 'false'

name: devops-trade-aggregate

spec:

sessionAffinity: None

selector:

app: devops-trade-aggregate

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-trade-aggregate

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

ports:

- name: tcp-10005

protocol: TCP

port: 10005

targetPort: 10005

---

apiVersion: apps/v1

kind: Deployment

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-trade-main

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

name: devops-trade-main-v1

annotations:

servicemesh.kubesphere.io/enabled: 'false'

spec:

replicas: 4

selector:

matchLabels:

version: v1

app: devops-trade-main

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-trade-main

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

logging.kubesphere.io/logsidecar-config: '{}'

sidecar.istio.io/inject: 'false'

spec:

containers:

- name: container-omhakh

imagePullPolicy: Always

pullSecret: harbor

image: 'IP:8088/prod/devops-trade-main'

ports:

- name: tcp-10006

protocol: TCP

containerPort: 10006

servicePort: 10006

volumeMounts:

- name: host-time

mountPath: /etc/localtime

readOnly: true

- name: app

readOnly: false

mountPath: /app

serviceAccount: default

affinity: {}

initContainers: []

volumes:

- hostPath:

path: /etc/localtime

type: ''

name: host-time

- name: app

emptyDir: {}

imagePullSecrets:

- name: harbor

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 25%

maxSurge: 25%

---

apiVersion: v1

kind: Service

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-trade-main

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

kubesphere.io/serviceType: statelessservice

servicemesh.kubesphere.io/enabled: 'false'

name: devops-trade-main

spec:

sessionAffinity: None

selector:

app: devops-trade-main

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-trade-main

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

ports:

- name: tcp-10006

protocol: TCP

port: 10006

targetPort: 10006

---

apiVersion: apps/v1

kind: Deployment

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-trade-object

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

name: devops-trade-object-v1

annotations:

servicemesh.kubesphere.io/enabled: 'false'

spec:

replicas: 4

selector:

matchLabels:

version: v1

app: devops-trade-object

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-trade-object

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

logging.kubesphere.io/logsidecar-config: '{}'

sidecar.istio.io/inject: 'false'

spec:

containers:

- name: container-rsvqcf

imagePullPolicy: Always

pullSecret: harbor

image: 'IP:8088/prod/devops-trade-object'

ports:

- name: tcp-10007

protocol: TCP

containerPort: 10007

servicePort: 10007

volumeMounts:

- name: host-time

mountPath: /etc/localtime

readOnly: true

- name: app

readOnly: false

mountPath: /app

serviceAccount: default

affinity: {}

initContainers: []

volumes:

- hostPath:

path: /etc/localtime

type: ''

name: host-time

- name: app

emptyDir: {}

imagePullSecrets:

- name: harbor

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 25%

maxSurge: 25%

---

apiVersion: v1

kind: Service

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-trade-object

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

kubesphere.io/serviceType: statelessservice

servicemesh.kubesphere.io/enabled: 'false'

name: devops-trade-object

spec:

sessionAffinity: None

selector:

app: devops-trade-object

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-trade-object

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

ports:

- name: tcp-10007

protocol: TCP

port: 10007

targetPort: 10007

---

apiVersion: apps/v1

kind: Deployment

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-trade-pay

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

name: devops-trade-pay-v1

annotations:

servicemesh.kubesphere.io/enabled: 'false'

spec:

replicas: 1

selector:

matchLabels:

version: v1

app: devops-trade-pay

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-trade-pay

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

logging.kubesphere.io/logsidecar-config: '{}'

sidecar.istio.io/inject: 'false'

spec:

containers:

- name: container-7xq5cc

imagePullPolicy: Always

pullSecret: harbor

image: 'IP:8088/prod/devops-trade-pay'

ports:

- name: tcp-10008

protocol: TCP

containerPort: 10008

servicePort: 10008

- name: tcp-8201

protocol: TCP

containerPort: 8201

servicePort: 8201

volumeMounts:

- name: host-time

mountPath: /etc/localtime

readOnly: true

- name: app

readOnly: false

mountPath: /app

serviceAccount: default

affinity: {}

initContainers: []

volumes:

- hostPath:

path: /etc/localtime

type: ''

name: host-time

- name: app

emptyDir: {}

imagePullSecrets:

- name: harbor

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 25%

maxSurge: 25%

---

apiVersion: v1

kind: Service

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-trade-pay

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

kubesphere.io/serviceType: statelessservice

servicemesh.kubesphere.io/enabled: 'false'

name: devops-trade-pay

spec:

sessionAffinity: None

selector:

app: devops-trade-pay

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-trade-pay

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

ports:

- name: tcp-10008

protocol: TCP

port: 10008

targetPort: 10008

- name: tcp-8201

protocol: TCP

port: 8201

targetPort: 8201

---

apiVersion: apps/v1

kind: Deployment

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-trade-process

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

name: devops-trade-process-v1

annotations:

servicemesh.kubesphere.io/enabled: 'false'

spec:

replicas: 1

selector:

matchLabels:

version: v1

app: devops-trade-process

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-trade-process

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

logging.kubesphere.io/logsidecar-config: '{}'

sidecar.istio.io/inject: 'false'

spec:

containers:

- name: container-7e9lc8

imagePullPolicy: Always

pullSecret: harbor

image: 'IP:8088/prod/devops-trade-process'

ports:

- name: tcp-10009

protocol: TCP

containerPort: 10009

servicePort: 10009

volumeMounts:

- name: host-time

mountPath: /etc/localtime

readOnly: true

- name: app

readOnly: false

mountPath: /app

serviceAccount: default

affinity: {}

initContainers: []

volumes:

- hostPath:

path: /etc/localtime

type: ''

name: host-time

- name: app

emptyDir: {}

imagePullSecrets:

- name: harbor

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 25%

maxSurge: 25%

---

apiVersion: v1

kind: Service

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-trade-process

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

kubesphere.io/serviceType: statelessservice

servicemesh.kubesphere.io/enabled: 'false'

name: devops-trade-process

spec:

sessionAffinity: None

selector:

app: devops-trade-process

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-trade-process

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

ports:

- name: tcp-10009

protocol: TCP

port: 10009

targetPort: 10009

---

apiVersion: apps/v1

kind: Deployment

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-websocket

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

name: devops-websocket-v1

annotations:

servicemesh.kubesphere.io/enabled: 'false'

spec:

replicas: 1

selector:

matchLabels:

version: v1

app: devops-websocket

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-websocket

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

logging.kubesphere.io/logsidecar-config: '{}'

sidecar.istio.io/inject: 'false'

spec:

containers:

- name: container-1vn5nf

imagePullPolicy: Always

pullSecret: harbor

image: 'IP:8088/prod/devops-websocket'

ports:

- name: tcp-10004

protocol: TCP

containerPort: 10004

servicePort: 10004

volumeMounts:

- name: host-time

mountPath: /etc/localtime

readOnly: true

- name: app

readOnly: false

mountPath: /app

serviceAccount: default

affinity: {}

initContainers: []

volumes:

- hostPath:

path: /etc/localtime

type: ''

name: host-time

- name: app

emptyDir: {}

imagePullSecrets:

- name: harbor

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 25%

maxSurge: 25%

---

apiVersion: v1

kind: Service

metadata:

namespace: devops-cloud

labels:

version: v1

app: devops-websocket

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

annotations:

kubesphere.io/serviceType: statelessservice

servicemesh.kubesphere.io/enabled: 'false'

name: devops-websocket

spec:

sessionAffinity: None

selector:

app: devops-websocket

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

template:

metadata:

labels:

version: v1

app: devops-websocket

app.kubernetes.io/version: v1

app.kubernetes.io/name: devops-cloud-cluster

ports:

- name: tcp-10004

protocol: TCP

port: 10004

targetPort: 10004

---

apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: devops-cloud
  labels:
    version: v1
    app: devops-workflow-core
    app.kubernetes.io/version: v1
    app.kubernetes.io/name: devops-cloud-cluster
  name: devops-workflow-core-v1
  annotations:
    servicemesh.kubesphere.io/enabled: 'false'
spec:
  replicas: 1
  selector:
    matchLabels:
      version: v1
      app: devops-workflow-core
      app.kubernetes.io/version: v1
      app.kubernetes.io/name: devops-cloud-cluster
  template:
    metadata:
      labels:
        version: v1
        app: devops-workflow-core
        app.kubernetes.io/version: v1
        app.kubernetes.io/name: devops-cloud-cluster
      annotations:
        logging.kubesphere.io/logsidecar-config: '{}'
        sidecar.istio.io/inject: 'false'
    spec:
      containers:
        - name: container-u985hw
          imagePullPolicy: Always
          pullSecret: harbor
          image: 'IP:8088/prod/devops-workflow-core'
          ports:
            - name: tcp-10010
              protocol: TCP
              containerPort: 10010
              servicePort: 10010
          volumeMounts:
            - name: host-time
              mountPath: /etc/localtime
              readOnly: true
            - name: app
              readOnly: false
              mountPath: /app
      serviceAccount: default
      affinity: {}
      initContainers: []
      volumes:
        - hostPath:
            path: /etc/localtime
            type: ''
          name: host-time
        - name: app
          emptyDir: {}
      imagePullSecrets:
        - name: harbor
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 25%
      maxSurge: 25%

---

apiVersion: v1
kind: Service
metadata:
  namespace: devops-cloud
  labels:
    version: v1
    app: devops-workflow-core
    app.kubernetes.io/version: v1
    app.kubernetes.io/name: devops-cloud-cluster
  annotations:
    kubesphere.io/serviceType: statelessservice
    servicemesh.kubesphere.io/enabled: 'false'
  name: devops-workflow-core
spec:
  sessionAffinity: None
  selector:
    app: devops-workflow-core
    app.kubernetes.io/version: v1
    app.kubernetes.io/name: devops-cloud-cluster
  template:
    metadata:
      labels:
        version: v1
        app: devops-workflow-core
        app.kubernetes.io/version: v1
        app.kubernetes.io/name: devops-cloud-cluster
  ports:
    - name: tcp-10010
      protocol: TCP
      port: 10010
      targetPort: 10010

---

apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: devops-cloud
  labels:
    version: v1
    app: devops-sign
    app.kubernetes.io/version: v1
    app.kubernetes.io/name: devops-cloud-cluster
  name: devops-sign-v1
  annotations:
    servicemesh.kubesphere.io/enabled: 'false'
spec:
  replicas: 1
  selector:
    matchLabels:
      version: v1
      app: devops-sign
      app.kubernetes.io/version: v1
      app.kubernetes.io/name: devops-cloud-cluster
  template:
    metadata:
      labels:
        version: v1
        app: devops-sign
        app.kubernetes.io/version: v1
        app.kubernetes.io/name: devops-cloud-cluster
      annotations:
        logging.kubesphere.io/logsidecar-config: '{}'
        sidecar.istio.io/inject: 'false'
    spec:
      containers:
        - name: container-u985hw
          imagePullPolicy: Always
          pullSecret: harbor
          image: 'IP:8088/prod/devops-sign'
          ports:
            - name: tcp-10013
              protocol: TCP
              containerPort: 10013
              servicePort: 10013
          volumeMounts:
            - name: host-time
              mountPath: /etc/localtime
              readOnly: true
            - name: app
              readOnly: false
              mountPath: /app
      serviceAccount: default
      affinity: {}
      initContainers: []
      volumes:
        - hostPath:
            path: /etc/localtime
            type: ''
          name: host-time
        - name: app
          emptyDir: {}
      imagePullSecrets:
        - name: harbor
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 25%
      maxSurge: 25%

---

apiVersion: v1
kind: Service
metadata:
  namespace: devops-cloud
  labels:
    version: v1
    app: devops-sign
    app.kubernetes.io/version: v1
    app.kubernetes.io/name: devops-cloud-cluster
  annotations:
    kubesphere.io/serviceType: statelessservice
    servicemesh.kubesphere.io/enabled: 'false'
  name: devops-sign
spec:
  sessionAffinity: None
  selector:
    app: devops-sign
    app.kubernetes.io/version: v1
    app.kubernetes.io/name: devops-cloud-cluster
  template:
    metadata:
      labels:
        version: v1
        app: devops-sign
        app.kubernetes.io/version: v1
        app.kubernetes.io/name: devops-cloud-cluster
  ports:
    - name: tcp-10013
      protocol: TCP
      port: 10013
      targetPort: 10013

Modify the pom connection configuration

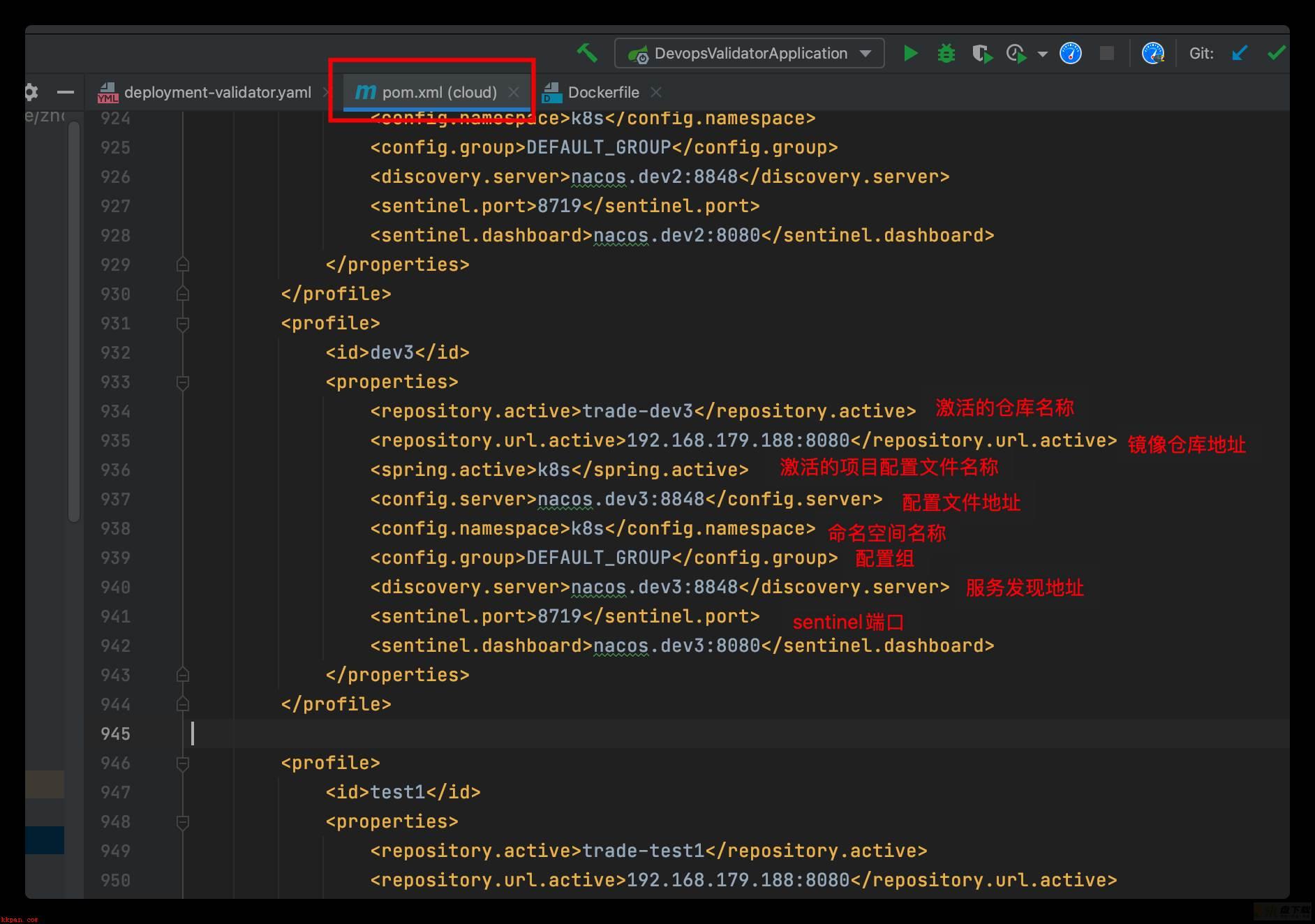

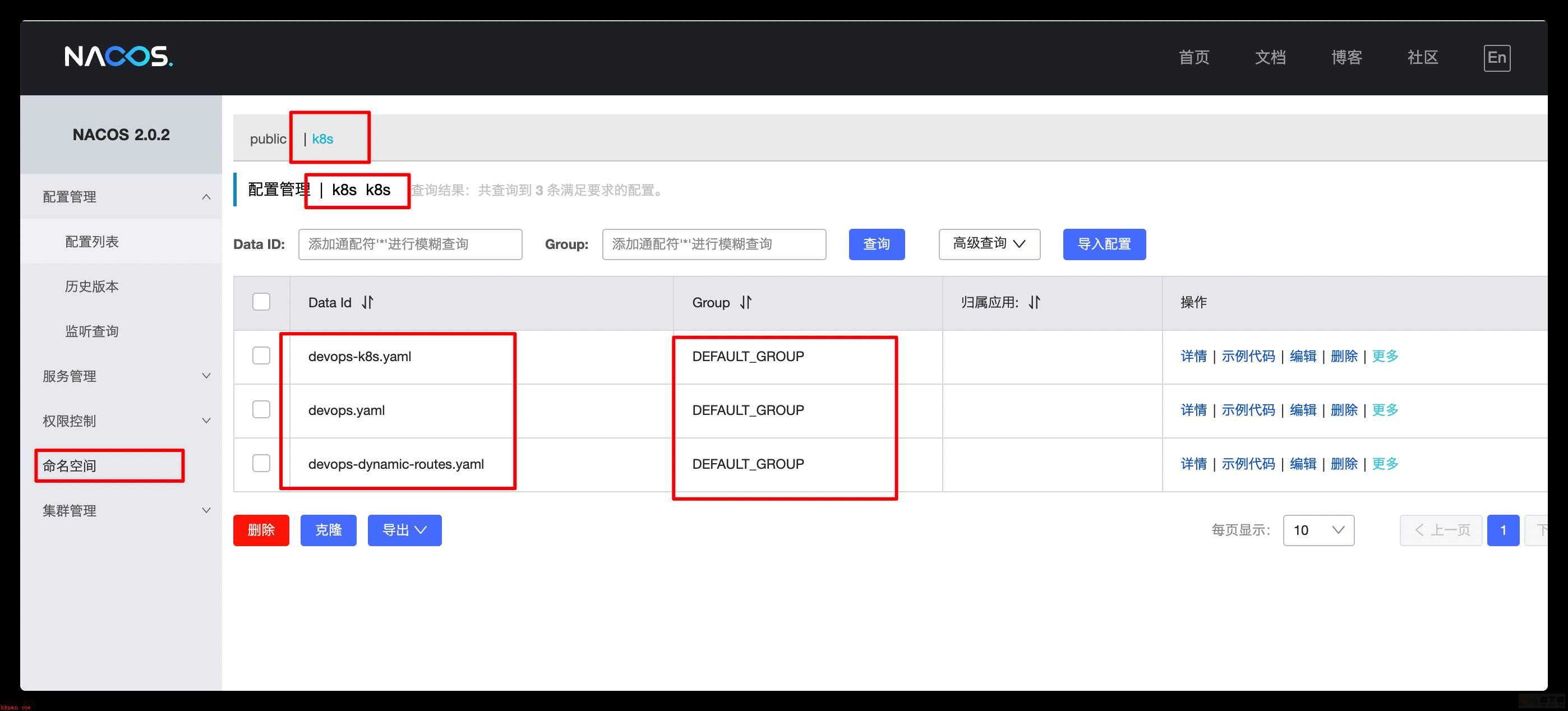

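1. Check that the Nacos DNS name in the project matches the one in the cluster.

2. Check that the configuration file name and the group name match.

The project uses Nacos.

In the KubeSphere console, open Services to find the externally exposed port of Nacos and the server it runs on, then open Nacos at ip:port/nacos.

Log in to Nacos and add the project's configuration files (keep them consistent with what the project's pom specifies).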

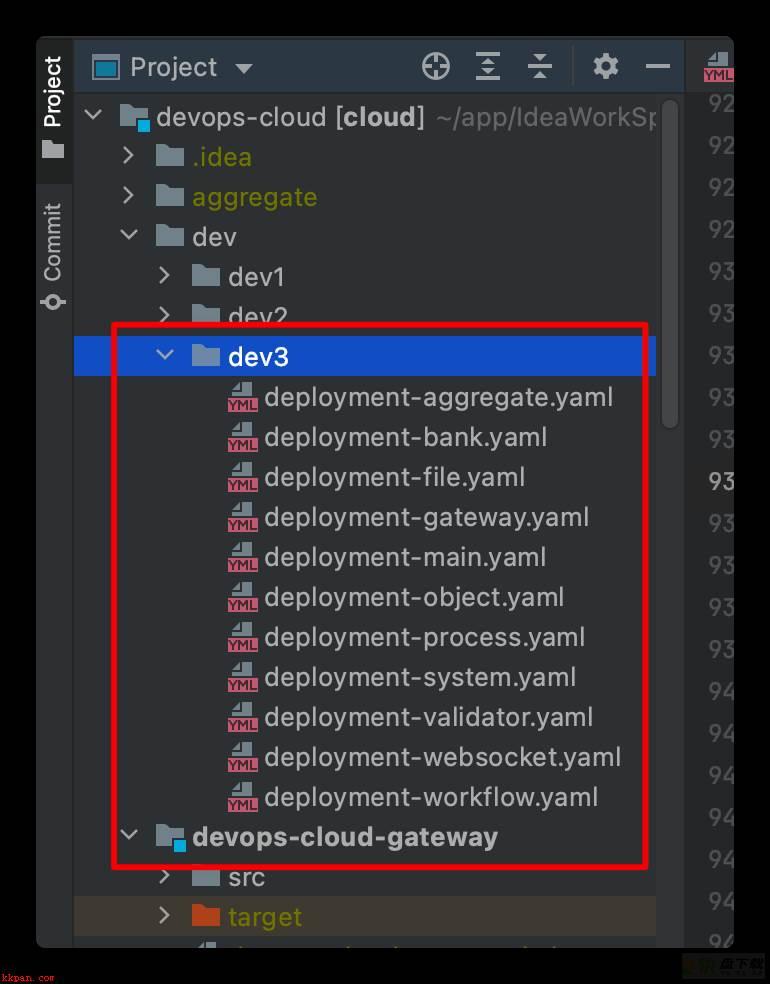
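The two checks above (address, then configuration file name and group) can also be run from a shell before a service is started. The following is a minimal sketch, assuming the Nacos v1 open API endpoint `/nacos/v1/cs/configs` is reachable and authentication is disabled; the function name, host, port, data ID and group are placeholders for illustration, not values from this project.

```shell
#!/bin/sh
# Build the Nacos open-API URL that returns a single configuration file.
# All arguments are placeholders; use the values from your own pom/bootstrap config.
nacos_config_url() {
  addr="$1"         # host:port of Nacos, e.g. the external port shown in KubeSphere
  data_id="$2"      # configuration file name (dataId)
  group="$3"        # group name
  tenant="${4:-}"   # optional namespace ID
  url="http://${addr}/nacos/v1/cs/configs?dataId=${data_id}&group=${group}"
  if [ -n "$tenant" ]; then
    url="${url}&tenant=${tenant}"
  fi
  printf '%s\n' "$url"
}

# Usage (needs network access to Nacos; an empty or error response suggests
# the file name or group does not match):
#   curl -s "$(nacos_config_url 192.0.2.10:30848 devops-validator.yaml DEFAULT_GROUP)"
```

If the response is empty, the data ID or group most likely differs from what the service requests.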

Note: each service uses a different port and a different jar name.

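# Base image: JDK 8
FROM java:8

# Maintainer information
MAINTAINER hetao

# Port exposed by the image
EXPOSE 10014

# Set the container time zone
ENV TZ=PRC
RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone

# Copy the jar from the target directory into the image root, keeping its name. An absolute destination path is recommended.
ADD target/devops-validator.jar /devops-validator.jar

# Startup command; the JVM environment variable, when set, replaces the default heap options
ENTRYPOINT java ${JVM:=-Xms2048m -Xmx2048m} -Djava.security.egd=file:/dev/./urandom -jar /devops-validator.jar

Each service's configuration is different. The main fields to change are the name (name), the namespace (namespace), the application name (app), the image address (image), and the port (tcp).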

kind: Deployment
apiVersion: apps/v1
metadata:
  name: devops-validator-v1
  namespace: devops-cloud
  labels:
    app: devops-validator
    app.kubernetes.io/name: devops-cloud-cluster
    app.kubernetes.io/version: v1
    version: v1
  annotations:
    deployment.kubernetes.io/revision: '2'
    kubesphere.io/creator: admin
    servicemesh.kubesphere.io/enabled: 'false'
spec:
  replicas: 1
  selector:
    matchLabels:
      app: devops-validator
      app.kubernetes.io/name: devops-cloud-cluster
      app.kubernetes.io/version: v1
      version: v1
  template:
    metadata:
      labels:
        app: devops-validator
        app.kubernetes.io/name: devops-cloud-cluster
        app.kubernetes.io/version: v1
        version: v1
      annotations:
        kubesphere.io/restartedAt: '2021-12-02T05:13:43.487Z'
        logging.kubesphere.io/logsidecar-config: '{}'
        sidecar.istio.io/inject: 'false'
    spec:
      volumes:
        - name: host-time
          hostPath:
            path: /etc/localtime
            type: ''
        - name: app
          emptyDir: {}
      containers:
        - name: container-bejgi2
          image: 'IP:8080/trade-devops-cloud/devops-validator'
          ports:
            - name: tcp-10014
              containerPort: 10014
              protocol: TCP
          env:
            - name: JVM
              value: '-Xms256m -Xmx256m'
          resources:
            limits:
              cpu: '1'
              memory: 1000Mi
            requests:
              cpu: 500m
              memory: 500Mi
          volumeMounts:
            - name: host-time
              readOnly: true
              mountPath: /etc/localtime
            - name: app
              mountPath: /app
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          imagePullPolicy: Always
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      dnsPolicy: ClusterFirst
      serviceAccountName: default
      serviceAccount: default
      securityContext: {}
      imagePullSecrets:
        - name: harbor
      affinity: {}
      schedulerName: default-scheduler
  strategy:
    type: Recreate
  revisionHistoryLimit: 10
  progressDeadlineSeconds: 600
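The Deployment above sets a `JVM` environment variable, while the Dockerfile's ENTRYPOINT uses `${JVM:=-Xms2048m -Xmx2048m}`. The sketch below reproduces that shell expansion on its own to show which value wins; the function name `jvm_opts` is made up for the illustration and is not part of the project.

```shell
#!/bin/sh
# Mimics the expansion used in the Dockerfile's ENTRYPOINT:
#   java ${JVM:=-Xms2048m -Xmx2048m} ...
# When JVM is unset or empty, the text after ":=" is assigned to JVM and used.
# When JVM already has a value (for example from the Deployment's env), that value is used.
jvm_opts() {
  echo ${JVM:=-Xms2048m -Xmx2048m}
}

# Usage:
#   unset JVM;               jvm_opts   # falls back to the 2048m default
#   JVM='-Xms256m -Xmx256m'; jvm_opts   # uses the value from the environment
```

With the manifest above, the container therefore starts with a 256 MB heap, which fits inside the 1000Mi memory limit, whereas the 2048 MB default would not.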

Generated by default

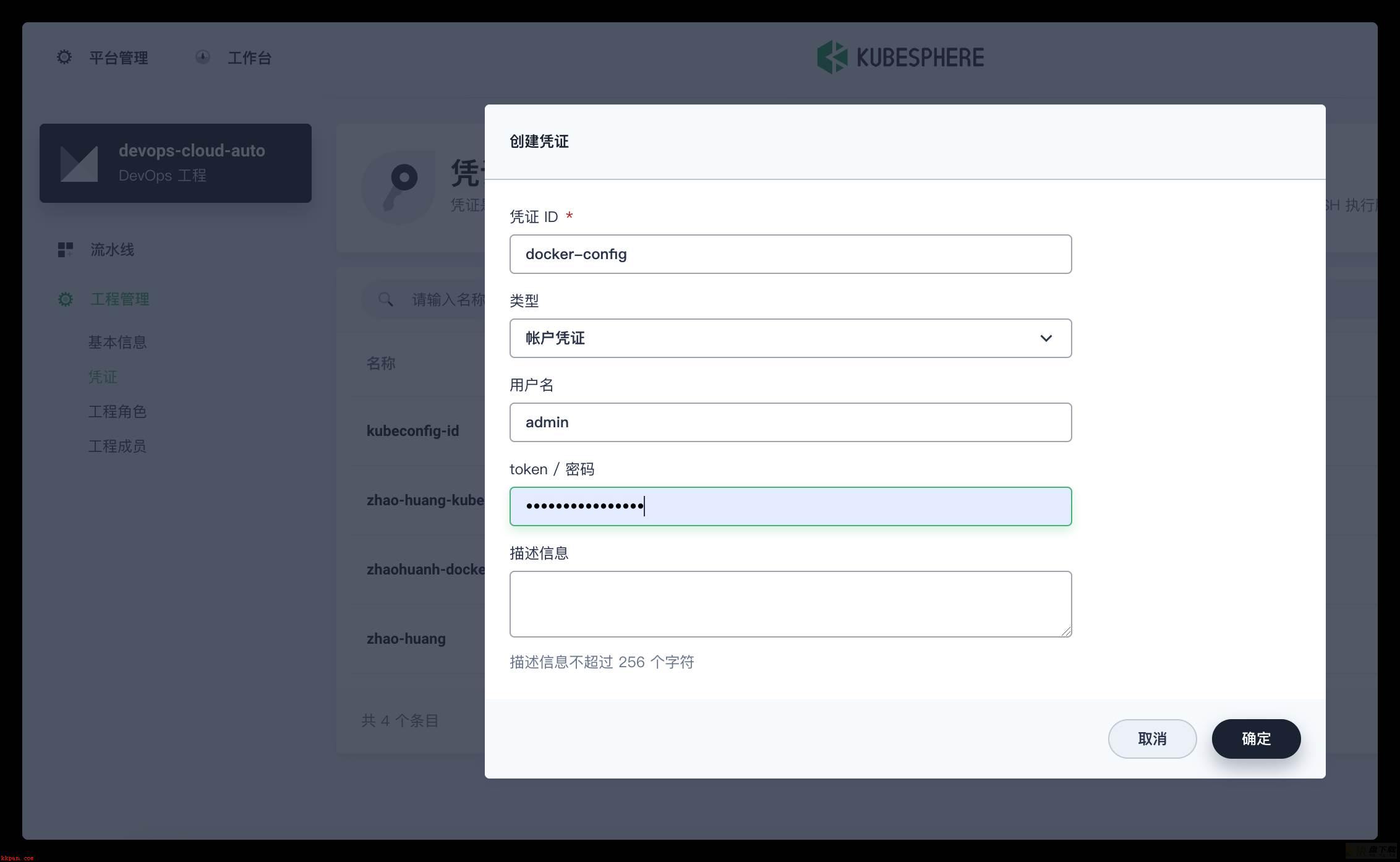

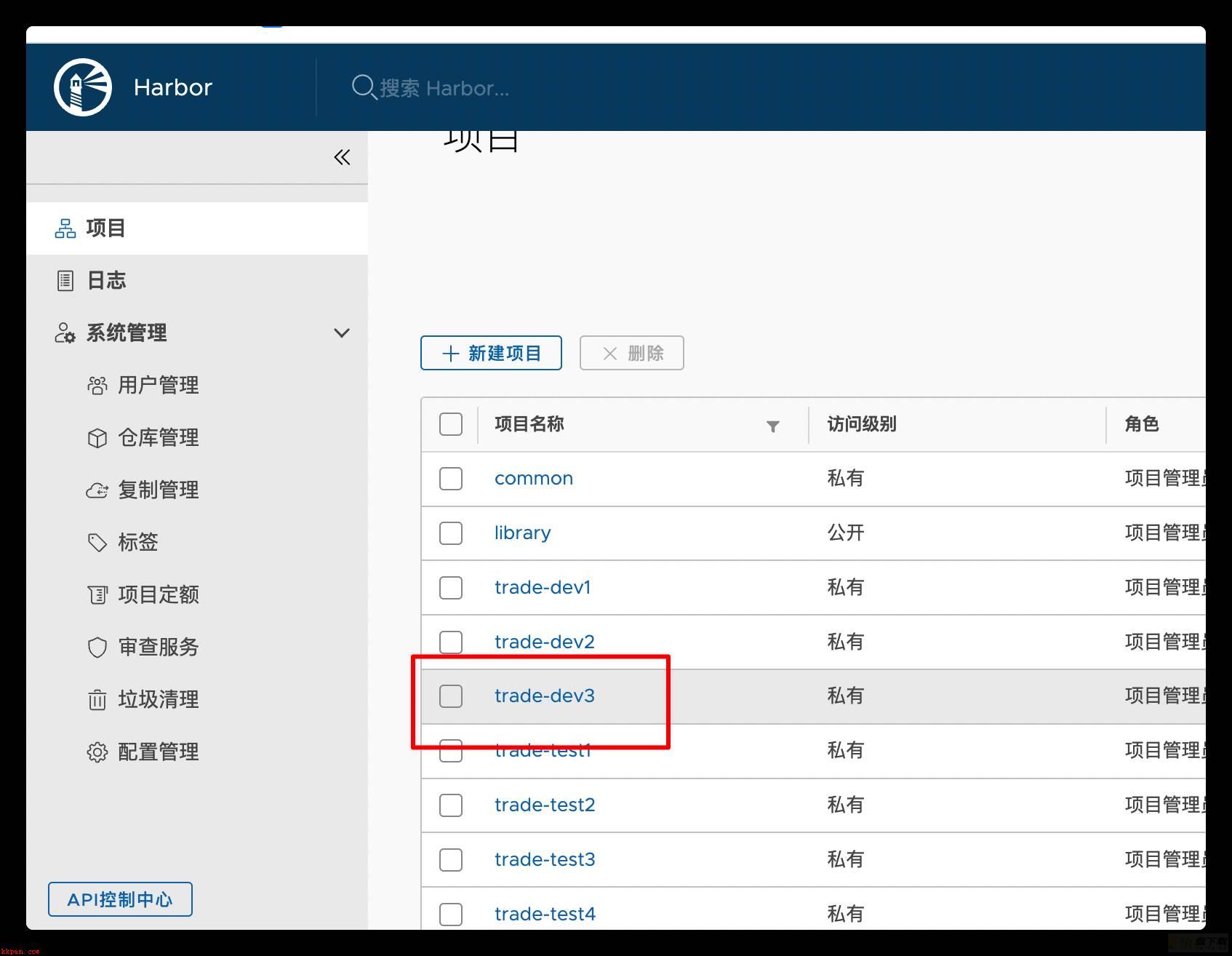

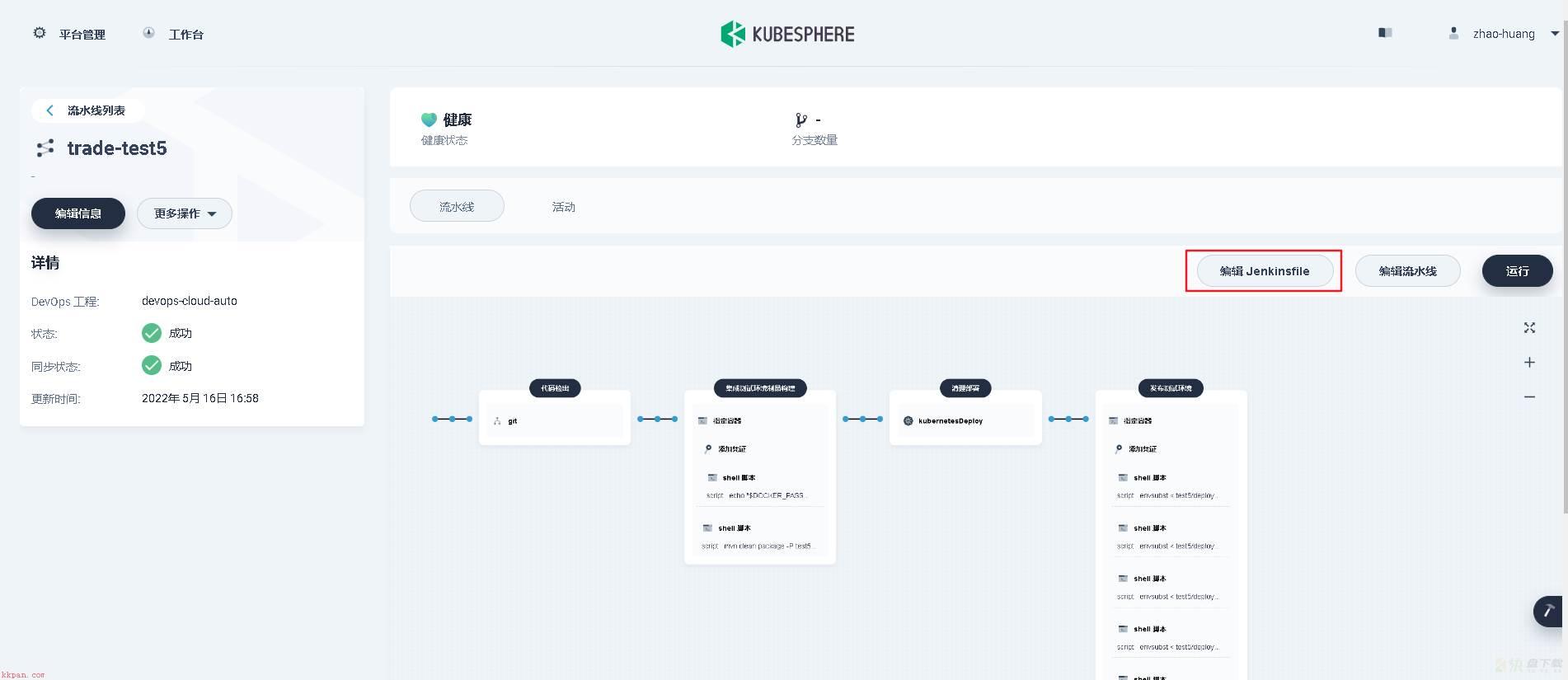

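Note: when building the artifact, check that the repository (project) named in the image address in the project's pom file already exists in Harbor. If it does not, the pipeline fails at run time and you need to create the repository yourself.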
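The repository can be created in the Harbor web UI. As an alternative, here is a minimal sketch that uses Harbor's v2.0 REST API (`POST /api/v2.0/projects`). The function name is hypothetical, the project name `prod` is taken from the image paths used earlier, and the admin password is a placeholder you must supply.

```shell
#!/bin/sh
# Print the JSON request body for creating a Harbor project.
# $1: project name, $2: "true" for a public project (defaults to "false").
harbor_project_payload() {
  name="$1"
  public="${2:-false}"
  printf '{"project_name": "%s", "metadata": {"public": "%s"}}\n' "$name" "$public"
}

# Usage (needs network access to Harbor; replace IP and <password>):
#   curl -s -u "admin:<password>" -H 'Content-Type: application/json' \
#        -X POST "http://IP:8088/api/v2.0/projects" \
#        -d "$(harbor_project_payload prod)"
```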

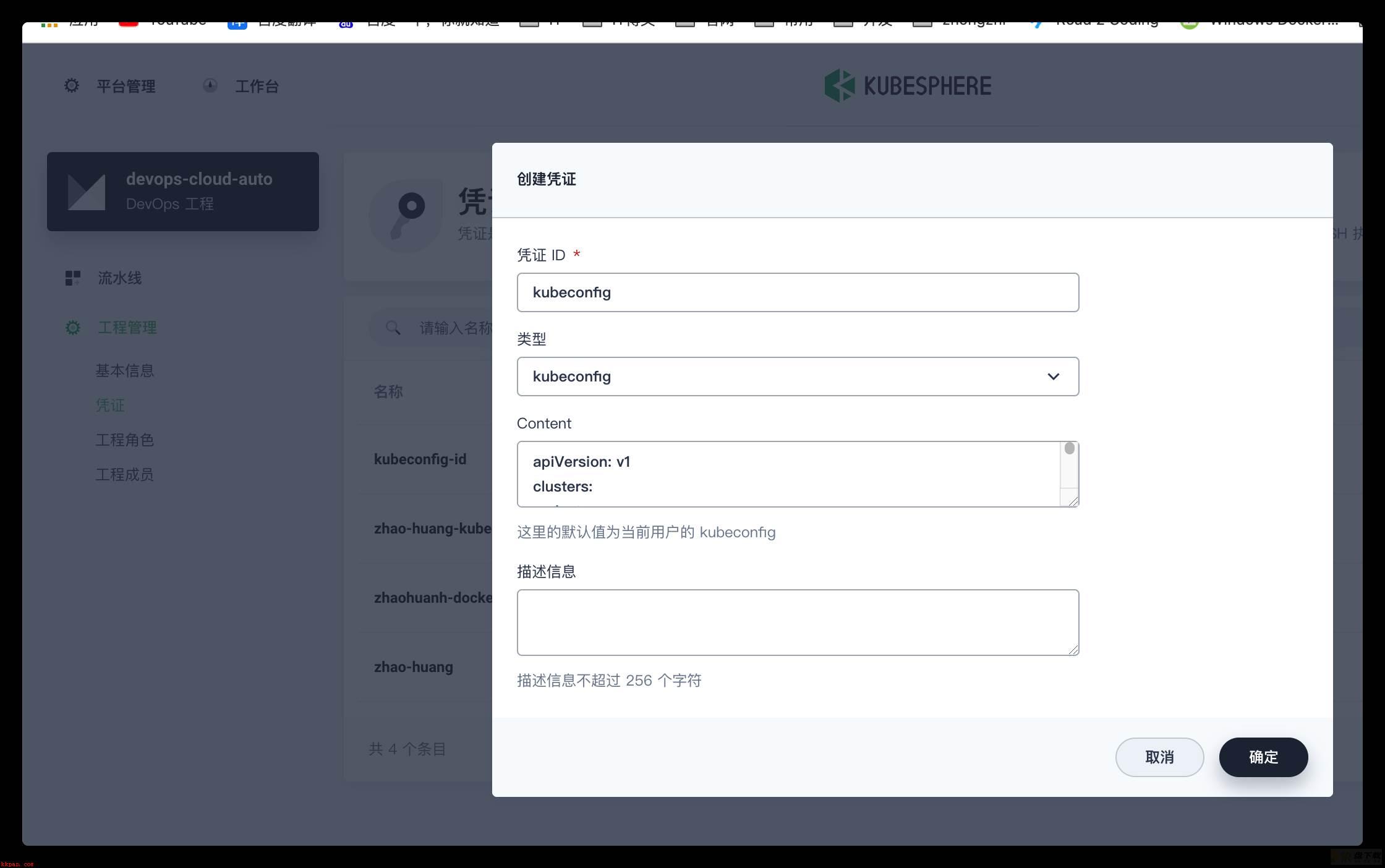

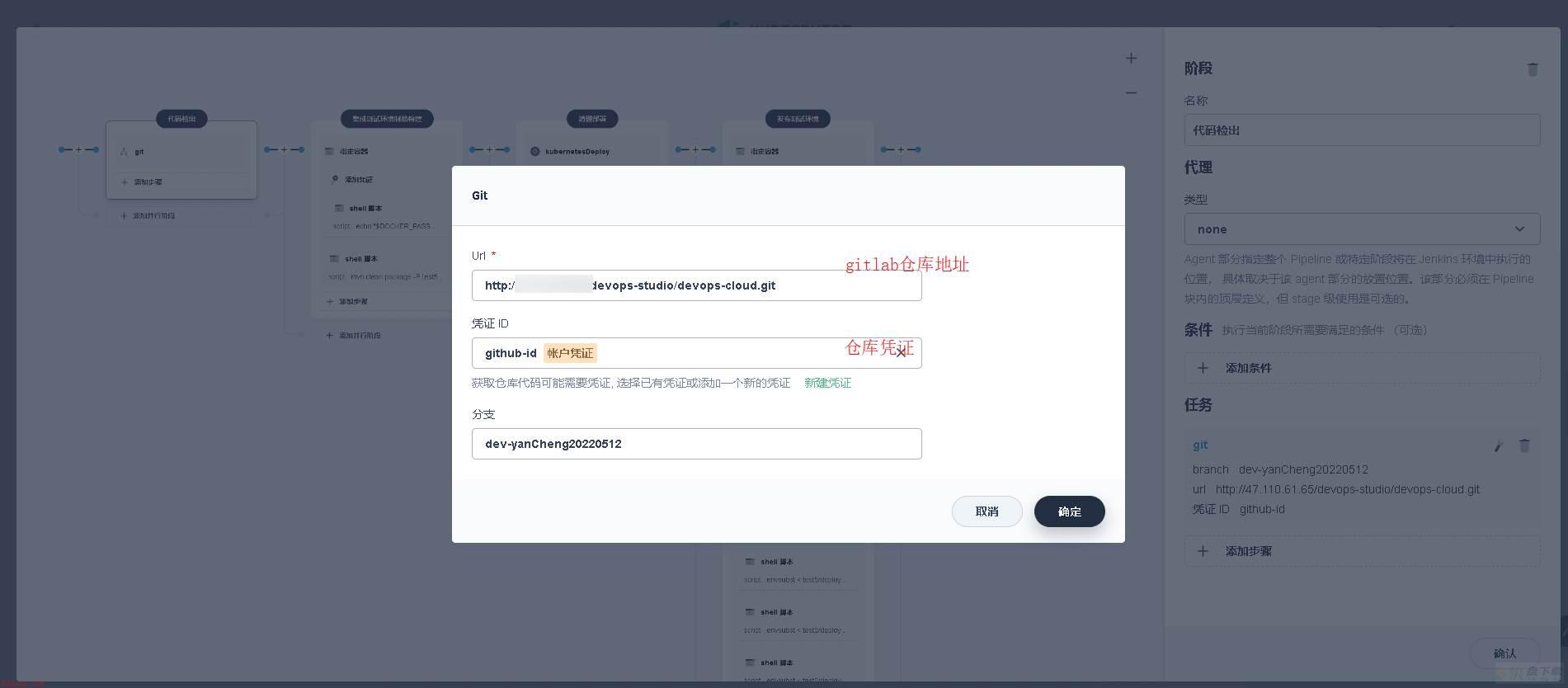

The credential is the one for the project's code repository address.

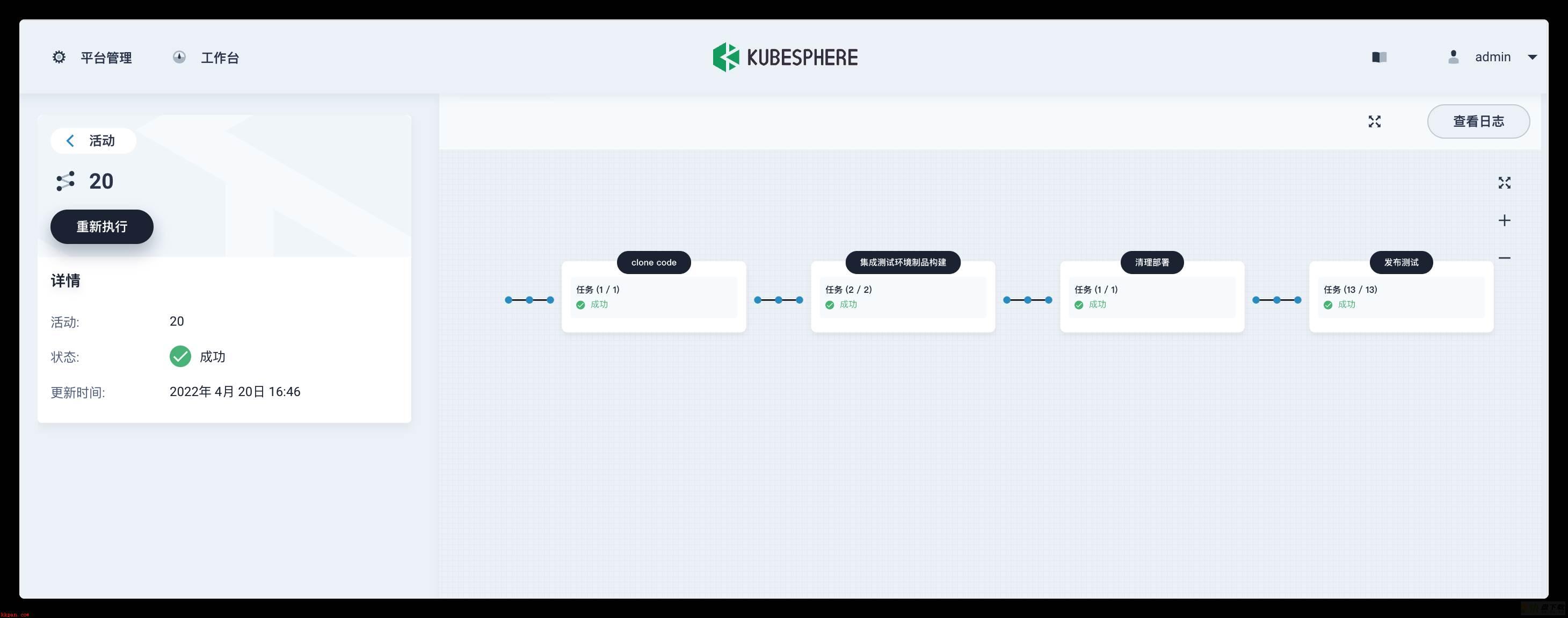

Success. Once startup finishes, check the logs of every container under the service to confirm that it started without errors.

Note

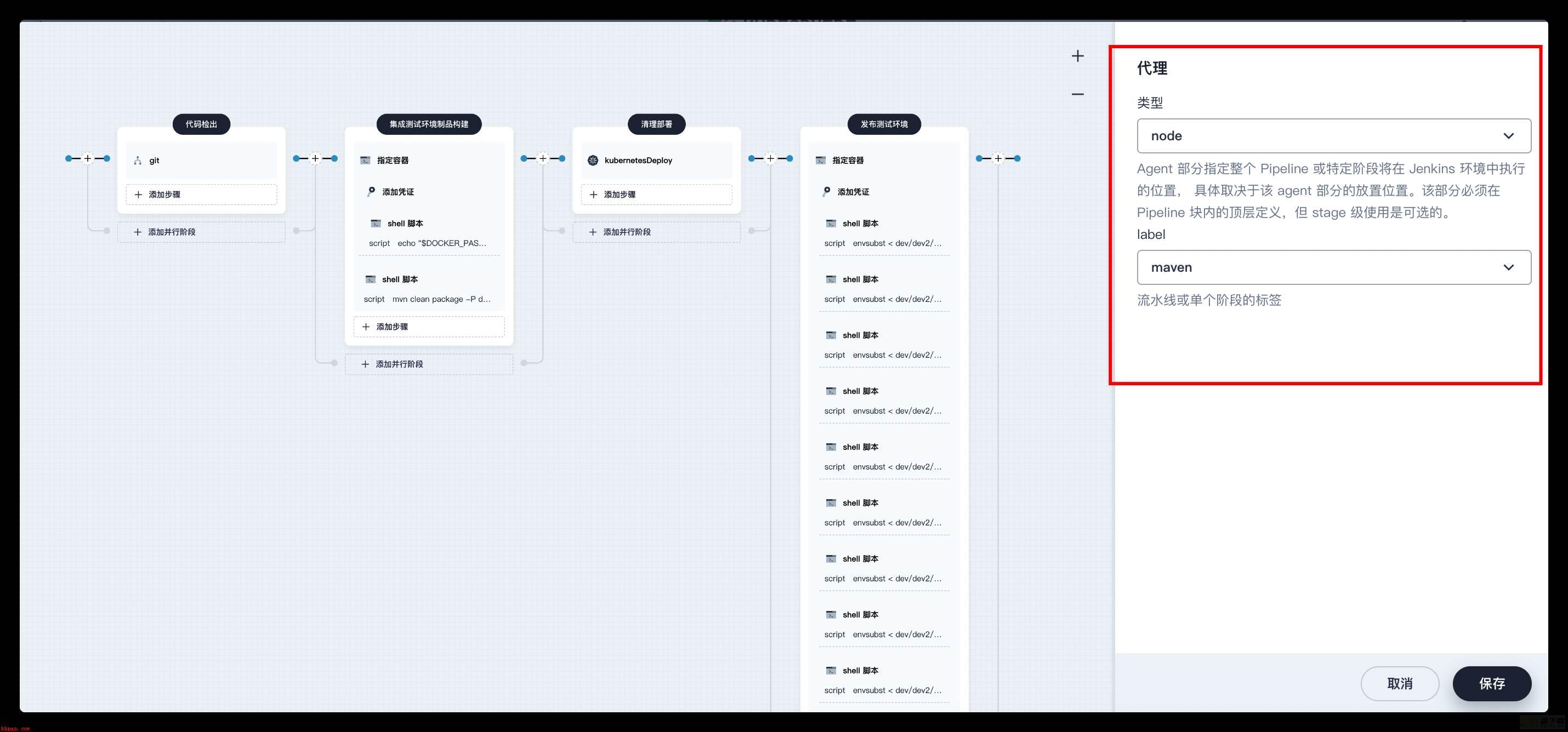

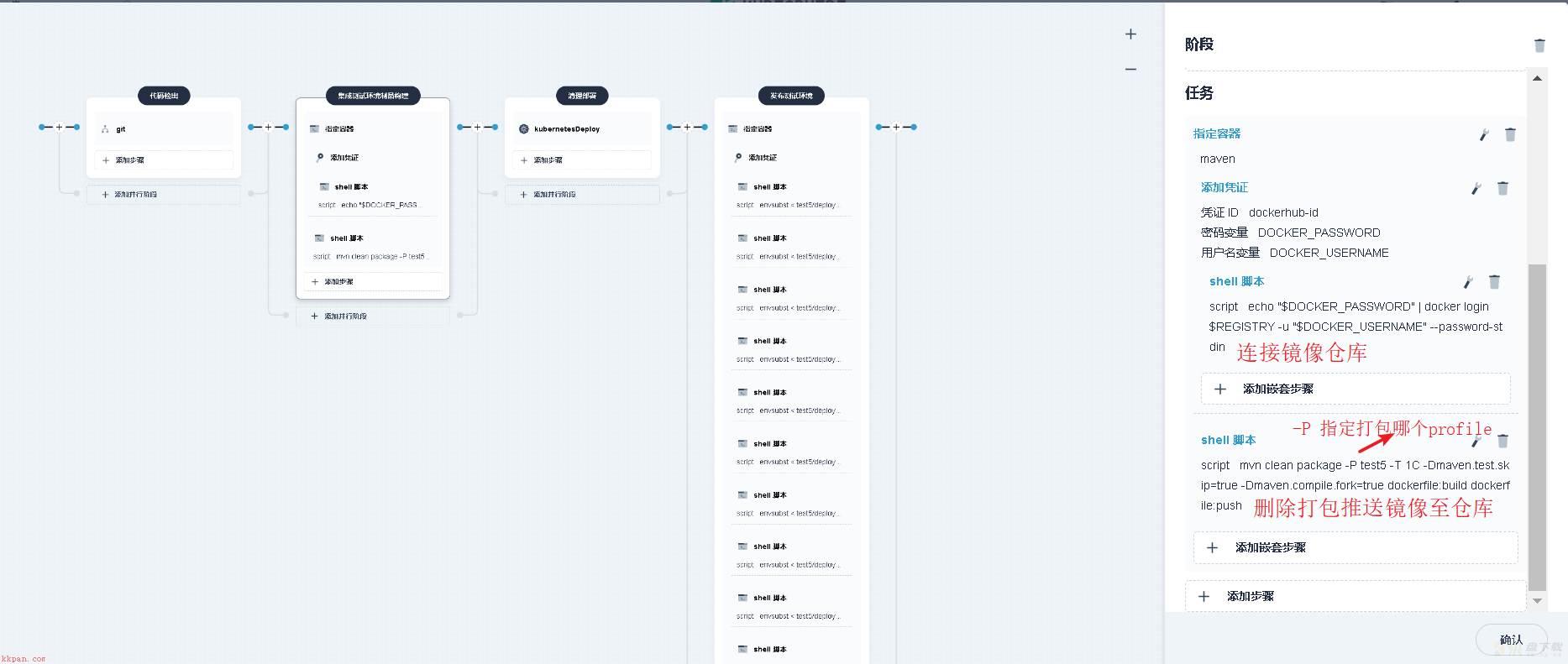

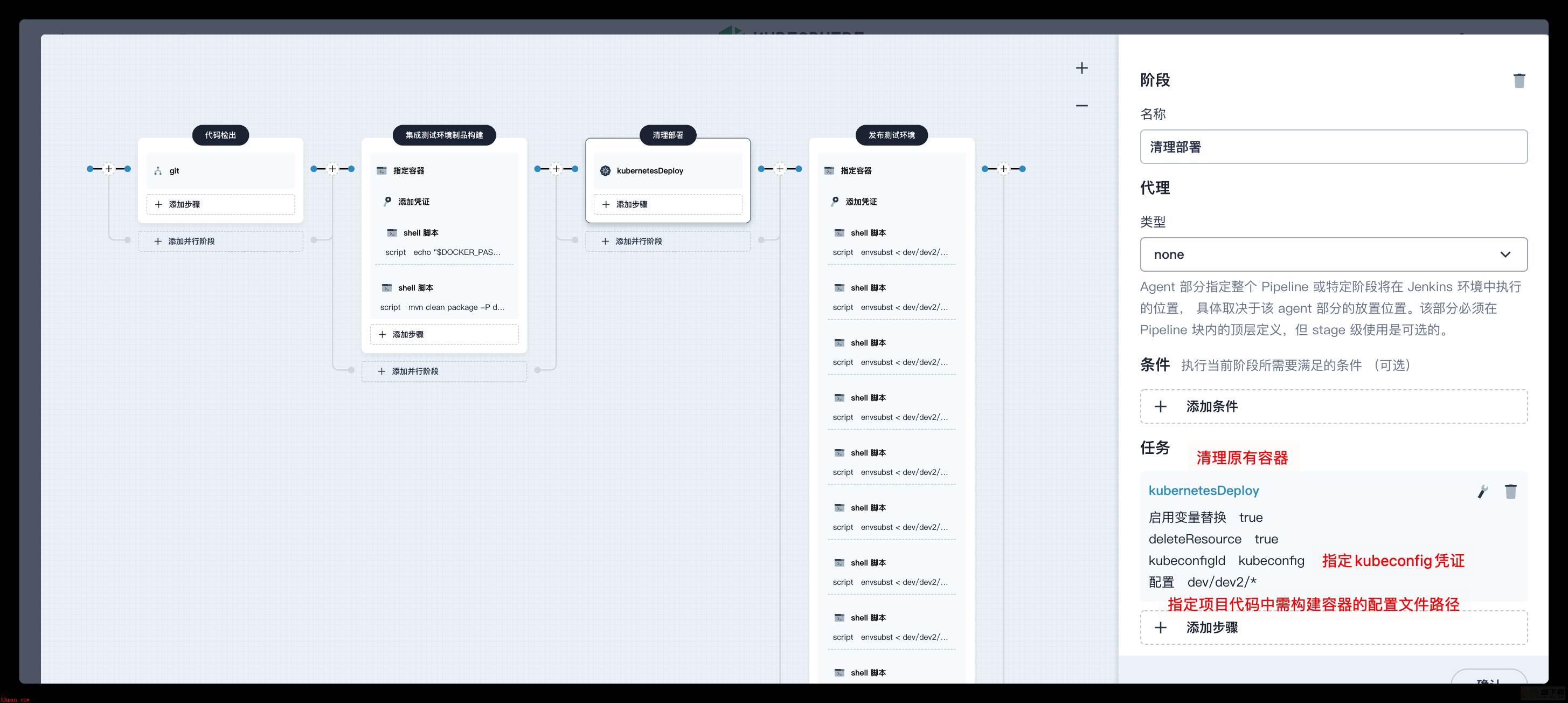

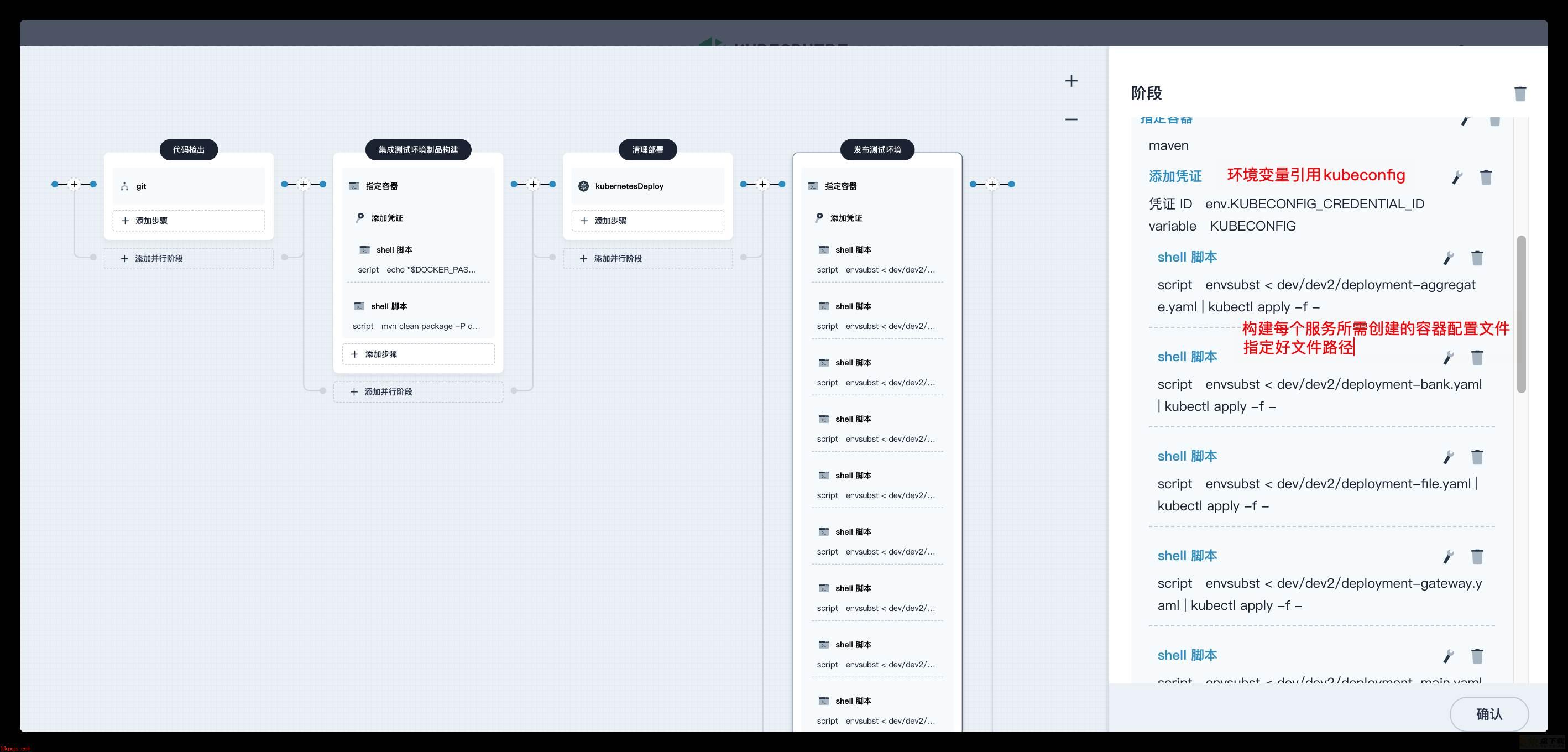

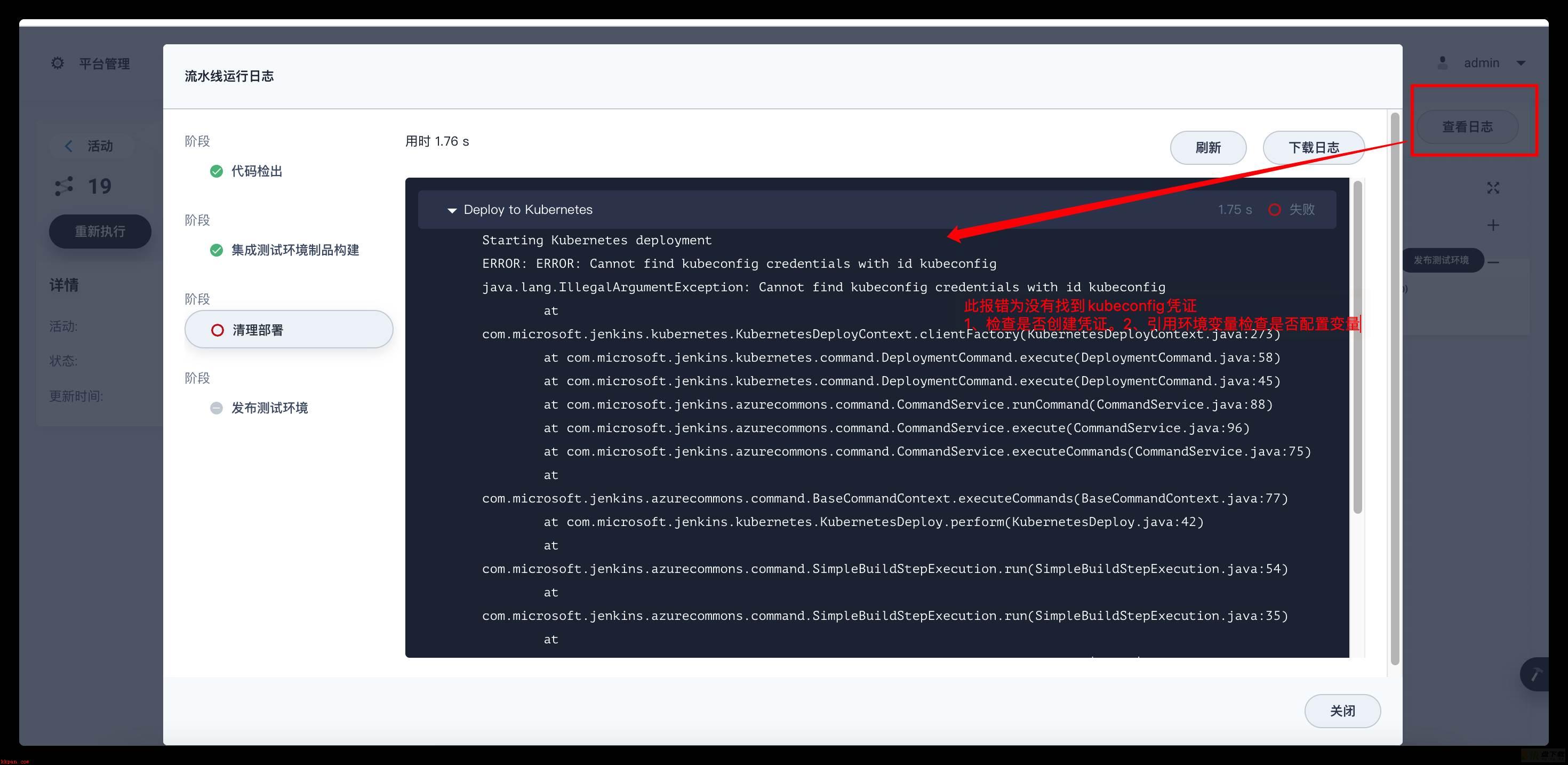

The environment variables in the environment block need to be adjusted, and so do the credentials.

pipeline {
  agent {
    node {
      label 'maven'
    }
  }
  stages {
    stage('Check out code') {
      agent none
      steps {
        git(branch: 'dev-yanCheng20220512', url: 'http://IP/devops-studio/devops-cloud.git', credentialsId: 'github-id', changelog: true, poll: false)
      }
    }
    stage('Build artifacts for the integration test environment') {
      agent none
      steps {
        container('maven') {
          withCredentials([usernamePassword(credentialsId: 'dockerhub-id', passwordVariable: 'DOCKER_PASSWORD', usernameVariable: 'DOCKER_USERNAME')]) {
            sh 'echo "$DOCKER_PASSWORD" | docker login $REGISTRY -u "$DOCKER_USERNAME" --password-stdin'
          }
          sh 'mvn clean package -P test5 -T 1C -Dmaven.test.skip=true -Dmaven.compile.fork=true dockerfile:build dockerfile:push'
        }
      }
    }
    stage('Clean up the previous deployment') {
      agent none
      steps {
        kubernetesDeploy(enableConfigSubstitution: true, deleteResource: true, kubeconfigId: 'kubeconfig-id', configs: 'test5/**')
      }
    }
    stage('Deploy to the test environment') {
      agent none
      steps {
        container('maven') {
          withCredentials([
            kubeconfigFile(
              credentialsId: env.KUBECONFIG_CREDENTIAL_ID,
              variable: 'KUBECONFIG')
          ]) {
            sh 'envsubst < test5/deployment-aggregate.yaml | kubectl apply -f -'
            sh 'envsubst < test5/deployment-bank.yaml | kubectl apply -f -'
            sh 'envsubst < test5/deployment-file.yaml | kubectl apply -f -'
            sh 'envsubst < test5/deployment-gateway.yaml | kubectl apply -f -'
            sh 'envsubst < test5/deployment-main.yaml | kubectl apply -f -'
            sh 'envsubst < test5/deployment-object.yaml | kubectl apply -f -'
            sh 'envsubst < test5/deployment-process.yaml | kubectl apply -f -'
            sh 'envsubst < test5/deployment-system.yaml | kubectl apply -f -'
            sh 'envsubst < test5/deployment-websocket.yaml | kubectl apply -f -'
            sh 'envsubst < test5/deployment-workflow.yaml | kubectl apply -f -'
            sh 'envsubst < test5/deployment-analysis.yaml | kubectl apply -f -'
            sh 'envsubst < test5/deployment-sign.yaml | kubectl apply -f -'
            sh 'envsubst < test5/deployment-message.yaml | kubectl apply -f -'
          }
        }
      }
    }
  }
  environment {
    DOCKER_CREDENTIAL_ID = 'dockerhub-id'
    KUBECONFIG_CREDENTIAL_ID = 'kubeconfig-id'
    REGISTRY = 'IP:8088'
  }
}
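The final pipeline stage repeats the same `envsubst ... | kubectl apply` step once per service. As a sketch only, the repetition could be expressed as one shell loop over the manifest directory; the function name `deploy_all` and its dry-run argument are assumptions added for illustration and do not appear in the original pipeline.

```shell
#!/bin/sh
# Apply every deployment-*.yaml under a directory, substituting environment
# variables first, the same way the pipeline's individual sh steps do.
# Pass any non-empty second argument to print the commands instead of running them.
deploy_all() {
  dir="$1"
  dry_run="${2:-}"
  for f in "$dir"/deployment-*.yaml; do
    # Skip the unexpanded pattern when the directory has no matching files.
    [ -e "$f" ] || continue
    if [ -n "$dry_run" ]; then
      echo "envsubst < $f | kubectl apply -f -"
    else
      envsubst < "$f" | kubectl apply -f - || return 1
    fi
  done
}

# Usage inside the 'maven' container, with KUBECONFIG already set:
#   deploy_all test5
```

The loop applies files in alphabetical order rather than the order listed in the pipeline, so keep the explicit list if the services must be applied in a particular sequence.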

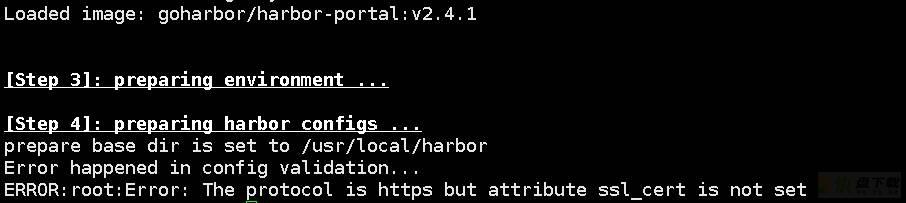

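Cause: as the message itself says, the https parameters are not set, and https is not needed here in the first place.

Solution: comment out the https section in the configuration file.

Cause: docker was not restarted after the firewall was turned off.

Solution: restart docker with the following command.

service docker restart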

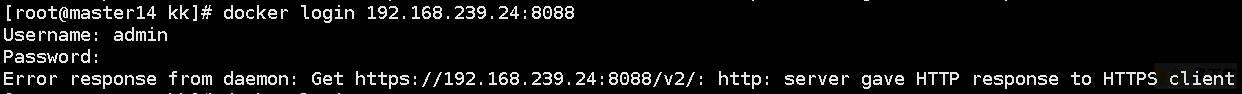

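Cause: Docker talks to registries over HTTPS by default, but the private registry set up here serves plain HTTP by default, so this error appears when interacting with it.

Solution: add the private registry to docker's insecure registries.

Run vi /etc/docker/daemon.json and add insecure-registries:

{
  "registry-mirrors": [
    "https://sq9p56f6.mirror.aliyuncs.com"
  ],
  "insecure-registries": ["192.168.239.24:8088"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}

Cause: checking directory by directory with du -h --max-depth=1 / shows that /var/lib/docker has grown too large.

Solution: migrate the data and change docker's default storage location, or attach external storage.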

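(1) Migrate the data and change docker's default storage location

# Stop the docker service
systemctl stop docker
# Create the new docker directory; run df -h to find a disk with enough space
mkdir -p /app/docker/lib
# Migrate /var/lib/docker to /app/docker/lib (no trailing slash on the source, so the data lands in /app/docker/lib/docker)
rsync -avz /var/lib/docker /app/docker/lib/
# Edit /usr/lib/systemd/system/docker.service
vi /usr/lib/systemd/system/docker.service
# Restart docker
systemctl daemon-reload
systemctl restart docker
systemctl enable docker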

(2) Confirm that the Docker Root Dir change has taken effect

[root@node24 docker]# docker info
...
Docker Root Dir: /app/docker/lib/docker
Debug Mode (client): false
Debug Mode (server): false
Registry: https://index.docker.io/v1/
...
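Because the old directory is deleted in a later step, the check in step (2) is worth scripting rather than reading by eye. A minimal sketch, assuming the new root is `/app/docker/lib/docker` as shown in the output above; the two function names are invented for this example.

```shell
#!/bin/sh
# Read `docker info` output on stdin and print the value of "Docker Root Dir".
docker_root_dir() {
  sed -n 's/^[[:space:]]*Docker Root Dir:[[:space:]]*//p'
}

# Succeed only when the root dir found on stdin equals the expected path ($1).
root_dir_matches() {
  expected="$1"
  actual="$(docker_root_dir)"
  [ "$actual" = "$expected" ]
}

# Usage on the docker host:
#   docker info 2>/dev/null | root_dir_matches /app/docker/lib/docker \
#     && echo "new root dir is active" \
#     || echo "still using the old root dir, do not delete /var/lib/docker"
```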

(3) Confirm that the earlier images are still there

[root@master24 kk]# docker images
REPOSITORY                                            TAG          IMAGE ID       CREATED        SIZE
perl                                                  latest       f9596eddf06f   5 months ago   890MB
hello-world                                           latest       feb5d9fea6a5   8 months ago   13.3kB
192.168.239.24:8088/library/nginxdemos/hello          plain-text   21dd11c8fb7a   8 months ago   22.9MB
nginxdemos/hello                                      plain-text   21dd11c8fb7a   8 months ago   22.9MB
192.168.239.24:8088/library/kubesphere/edge-watcher   v0.1.0       f3c1c017ccd5   8 months ago   47.8MB
kubesphere/edge-watcher                               v0.1.0       f3c1c017ccd5   8 months ago   47.8MB

(4) Once you have confirmed that the containers work, delete the old /var/lib/docker directory

rm -rf /var/lib/docker

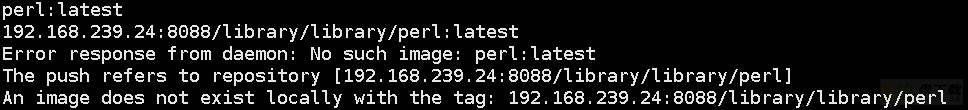

docker does not have this image locally. Pull it, tag it for the private registry, and push it there.
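The pull, tag and push sequence can be sketched as one helper. The function name `mirror_image` and the `DRY_RUN` switch are assumptions for illustration; the registry address and the `library` project mirror the naming visible in the `docker images` listing earlier.

```shell
#!/bin/sh
# Pull an image, tag it for a private registry project, and push it.
# $1: source image (name:tag), $2: registry host:port, $3: registry project.
# Set DRY_RUN to any non-empty value to print the docker commands instead of running them.
mirror_image() {
  src="$1"
  registry="$2"
  project="$3"
  dst="${registry}/${project}/${src}"
  run="${DRY_RUN:+echo}"
  $run docker pull "$src" &&
    $run docker tag "$src" "$dst" &&
    $run docker push "$dst"
}

# Usage on a host that can reach both the source registry and the private one:
#   docker login 192.168.239.24:8088
#   mirror_image nginxdemos/hello:plain-text 192.168.239.24:8088 library
```

In a fully offline environment the pull step has to be replaced by `docker load` from an image archive copied onto the host.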

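(1) Case one

docker's default source is the official overseas registry, which downloads slowly. Switch to a domestic mirror.

Run vi /etc/docker/daemon.json and add registry-mirrors. The mirror URL below is my own Alibaba Cloud image accelerator; you can get one of your own from Alibaba Cloud.

{
  "registry-mirrors": [
    "https://sq9p56f6.mirror.aliyuncs.com"
  ],
  "insecure-registries": ["192.168.239.24:8088"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}

(2) Case two

Harbor itself has a problem, so the private registry cannot be reached.

(3) Case three

The private registry configured in config-sample.yaml is wrong, so the corresponding image cannot be found.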
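For case three, one quick consistency check is whether the registry address written in config-sample.yaml is also listed under insecure-registries in docker's daemon.json. A minimal sketch, assuming a daemon.json shaped like the ones shown above; the function name is hypothetical and the check is a plain text match, not a full JSON parse.

```shell
#!/bin/sh
# Succeed when the given registry (host:port) appears in the
# "insecure-registries" array of a docker daemon.json file.
# $1: registry address, $2: path to daemon.json (defaults to /etc/docker/daemon.json).
registry_is_insecure() {
  registry="$1"
  daemon_json="${2:-/etc/docker/daemon.json}"
  # Strip whitespace so the array is matched even when it spans several lines.
  tr -d ' \n\t' < "$daemon_json" |
    grep -q "\"insecure-registries\":\[[^]]*\"${registry}\""
}

# Usage, with the address copied from config-sample.yaml:
#   registry_is_insecure 192.168.239.24:8088 \
#     && echo "registry is listed" \
#     || echo "add it to insecure-registries and restart docker"
```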