;未完待续;持续更新中;

目录

序言

0 存储设备

0.1 机械盘

0.2 SSD

0.3 NVDIMM

1 VFS

1.0 基础组件

1.0.1 iov_iter

1.0.2 iomap

1.1 inode

1.1.1 inode的生命周期

1.2 Dentry

1.2.1 dentry的功能

1.2.2 dentry操作

1.2.3 root dentry

1.2.4 d_splice_alias

1.2.5 lookup

1.2.6 symbol link

1.3 mount

1.3.1 嫁接

1.3.2 loop mount

1.4 page cache

1.4.1 radix tree

1.4.3 mmap

1.4.4 direct IO

1.5 DAX

1.5.1 Pmem

1.5.2 fs/dax

Linux的存储栈包括;

存储栈的组件在设计时;一个重要的考量因素;就是如何更好的使用存储设备;使其发挥出最好的性能;所以;在研究Linux存储栈之前;我们先要了解下;各种存储设备的特点。

我们评估价下磁盘以下性能指标;

Host;Intel(R) Xeon(R) Gold 5218 CPU ; 2.30GHz

HDD;ST2000NM0055-1V4;磁盘容量1.8T

顺序IO;不同IOsize (--bs)

5124K8K16K32K64K128K256K512K1M4M24us30us39us79us157us314us629us1.26ms2.52ms5.04ms20.24msfio --bs=256K --size=512G --rw=read --norandommap --direct=1 --ioengine=libaio --iodepth=1 --runtime=60 --name=test --filename=/dev/sdb

随机IO;不同IO Range ;--size;

32M64M128M256M512M1G2G106us954us3.02ms4.08ms4.63ms5.06ns5.29ms 4G8G16G32G64G128G256G512G1T1.5T5.51ms5.69ms5.85ms6.09ms6.34ms6.78ms7.36ms8.1ms9.44ms10.52msfio --bs=512 --size=1536G --rw=randread --norandommap --direct=1 --ioengine=libaio --iodepth=1 --runtime=60 --name=test --filename=/dev/sdb --group_reporting

通过以上数据;我们可以大致估算;磁盘执行IO主要分成三部分的时间;

所以;这块机械盘的瓶颈有个决定因素;

还是以这个磁盘为例;如果是xfs;默认4个Allocation Group;每个AG的大小512G(最后一个不足512G);不同目录的文件;通常会被分配到不同的AG;当多个目录的文件同时IO时;其范围很可能在256G以上;也就是磁头移动造成的时间大概在7ms;那么;每秒处理的IO数;也就是IOPS最高只能在143;这就是此时盘的瓶颈;此时;IOsize越大;盘的带宽就会越高。

;占坑;

NVDIMM这个名字中包含了该种类型设备的两个特性;

即NVDIMM指的是一种使用DIMM接口的非易失性存储设备;

Intel近些年推出的Optane DC PMM就是其中的一种;其基于3D XPoint存储技术;同样的存储技术还基于NVMe接口实现了Optane SSD;其实我们也可以给硬盘实现DIMM接口;只不过成本和性能就看不过去了。

Optane DCPMM第一代产品代号为Apache Pass;也就是我们常说的AEP;其第二代产品代号为Barlow Pass;不过;网传英特尔将终止基于 3D XPoint 技术的 Optane 业务。

NVDIMM的性能数据来自网络上的两篇Paper《Basic Performance Measurements of the Intel Optane DC Persistent Memory Module》《Initial Experience with 3D XPoint Main Memory》;感兴趣的同学可以到https://download.csdn.net/download/home19900111/86736994https://download.csdn.net/download/home19900111/86736994

Initial Experience with 3D XPoint Main Memoryhttps://download.csdn.net/download/home19900111/86737051

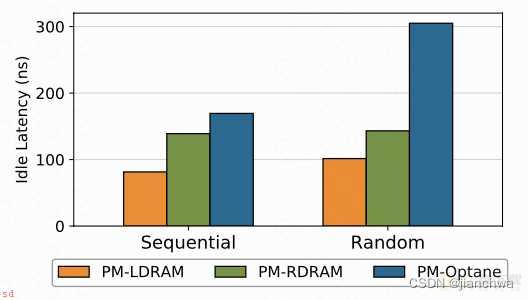

图中的LDRAM的意思是本地内存访问;RDRAM跨numa内存访问;

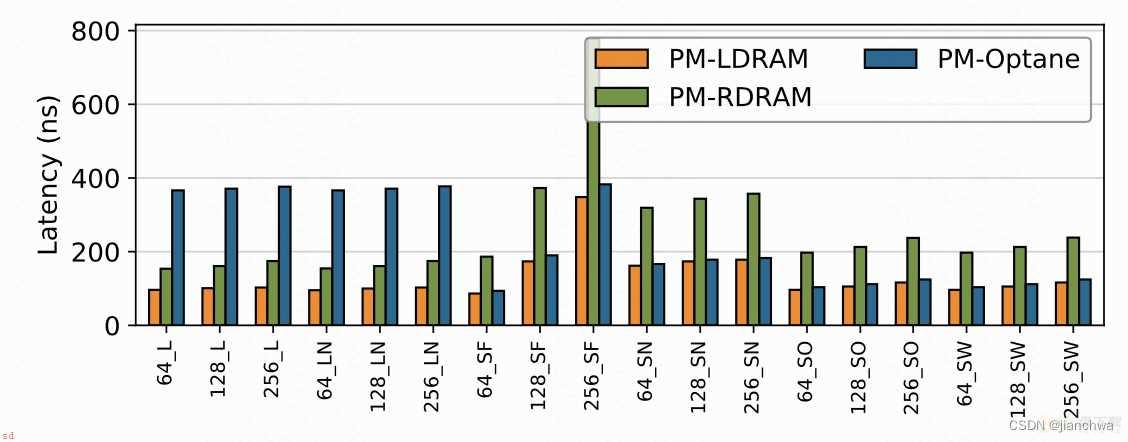

L stands for regular loads,

LN stands for non-temporal loads,

SF stands for stores followed by clflush

SN stands for non- temporal stores

SO stands for stores followed by clflushopt

SW stands for stores followed by clwb.

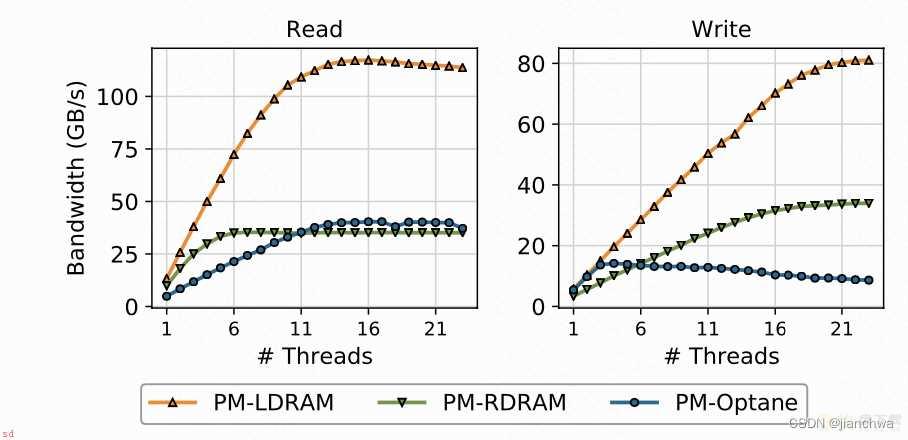

从图中的数据来看;虽然Opane的数据与真正的内存相比依然有些差距;但是已经基本在一个数量级;结合《Initial Experience with 3D XPoint Main Memory》中的结论;

The memory access performance of 3D XPoint is mod- estly lower than that of DRAM. Compared to DRAM, 3D XPoint’s read performance can be up to 2.5x slower, and its write performance can be up to 2.3x slower.

The write performance of 3D XPoint is quite similar to its read performance. Writes are at most 2.3x slower than reads. The asymmetric effect of NVM reads and writes are modest.

Persisting writes to 3D XPoint incur drastic cost for sequential and random writes. The slowdowns can be at least 10.7x and 2.9x for sequential writes and random writes, respectively.

The performance of 3D XPoint degrades as an application accesses more data. We suspect that techniques such as caching are exploited in the 3D Xpoint modules to improve performance. This effect is less significant when the size of data visited is 32GB or larger for the 256GB 3D XPoint module.

与HDD或者Nand SSD对比;这无疑是;史诗级;的性能提升;但是;在实际使用中;我们发现AEP最大的问题是;由于其使用load/store指令直接访问;无法实现异步;这造成相关应用的CPU利用率显著升高。

Intel后来推出了基于FPGA的硬件加速模块;使用内部的几百上千的并发单元访问AEP。

不过;现阶段AEP仍然无法真正在引发计算系统革命;当NVDIMM的性能达到DDR且成本可控时;才会真正颠覆现有的架构。

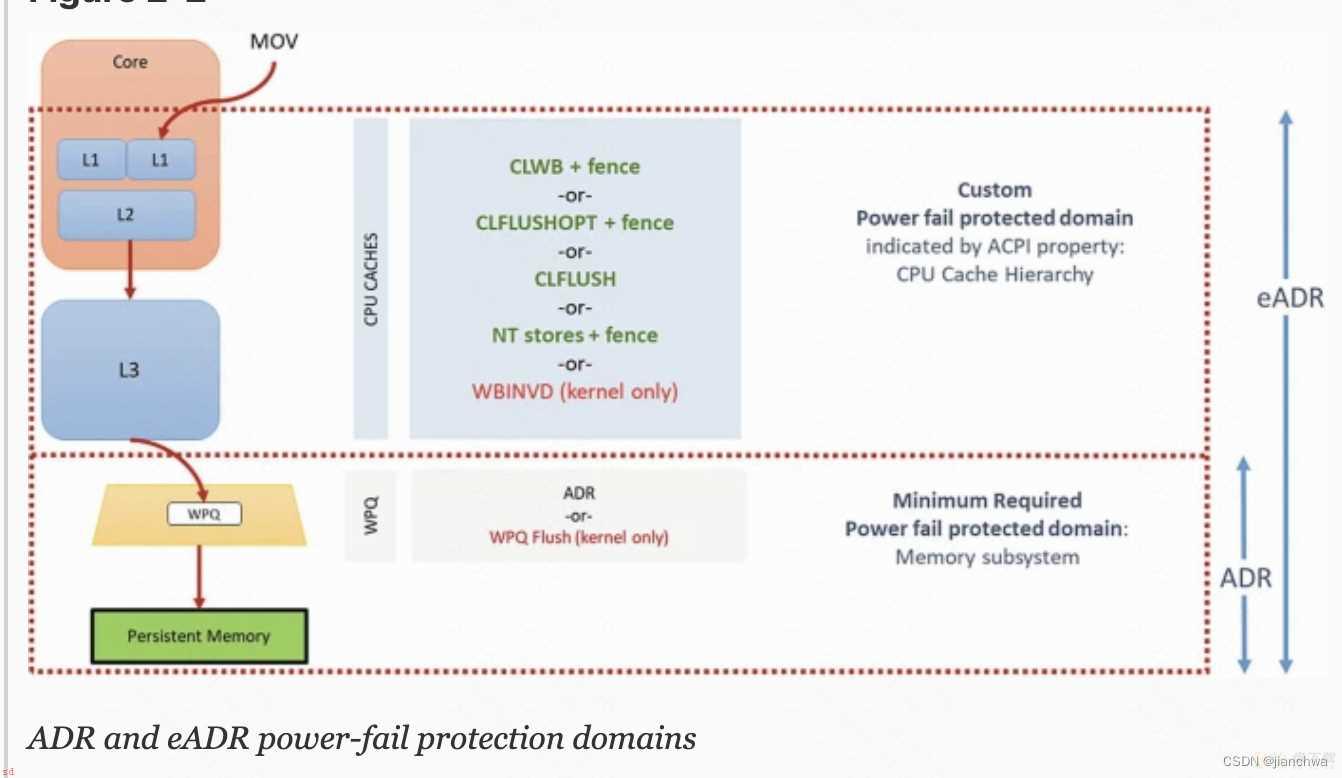

关于nvdimm的持久化编程;参考掉电保护域;

Asynchronous DRAM Refresh (ADR) is a feature supported on Intel products which flushes the write-protected data buffers and places the DRAM in self-refresh. This process is critical during a power loss event or system crash to ensure the data is in a safe and consistent state on persistent memory. By default, ADR does not flush the processor caches. A platform that supports ADR only includes persistent memory and the memory controller’s write pending queues within the persistence domain. This is the reason data in the CPU caches must be flushed by the application using the CLWB, CLFLUSHOPT, CLFLUSH, non-temporal stores, or WBINVD machine instructions.

Enhanced Asynchronous DRAM Refresh (eADR) requires that a non-maskable interrupt (NMI) routine be called to flush the CPU caches before the ADR event can begin. Applications running on an eADR platform do not need to perform flush operations because the hardware should flush the data automatically, but they are still required to perform an SFENCE operation to maintain write order correctness. Stores should be considered persistent only when they are globally visible, which the SFENCE guarantees.

实现持久化编程的几个关键指令;

参考

What is iov_iterhttps://jianchwa.github.io/fs/xfs_v2.html#iov_iter

我们首先看下iomap结构

#define IOMAP_HOLE 0 /* no blocks allocated, need allocation */

#define IOMAP_DELALLOC 1 /* delayed allocation blocks */

#define IOMAP_MAPPED 2 /* blocks allocated at ;addr */

#define IOMAP_UNWRITTEN 3 /* blocks allocated at ;addr in unwritten state */

#define IOMAP_INLINE 4 /* data inline in the inode */

struct iomap {

u64 addr; /* disk offset of mapping, bytes */

loff_t offset; /* file offset of mapping, bytes */

u64 length; /* length of mapping, bytes */

u16 type; /* type of mapping */

u16 flags; /* flags for mapping */

struct block_device *bdev; /* block device for I/O */

...

const struct iomap_page_ops *page_ops;

};iomap描述的是从文件的(iomap.offset, iomap.lengh)到block设备上(iomap.addr, iomap.length)的映射;其意义类似ext2的block mapping和ext4的extent;参考generic_perform_write();执行aops->write_begin()是以page为单位的;而xfs_file_write_iter()使用的iomap_file_bufferred_write();其调用iomap_start操作的是一个范围;这对于大块的连续IO可以提高代码执行效率。

我们看下iomap的标准操作流程;

dax_iomap_rw()

---

while ((ret = iomap_iter(&iomi, ops)) > 0)

---

(1) iomap_end

(2) advance iter by iter.processed

(3) iomap_begin, based on iter.inode, iter.pos, iter.len, get iomap.addr, iomap.offset, iomap.length

---

iomi.processed = dax_iomap_iter(&iomi, iter);

---

read/write on iomap.addr ; (iomap.offset - iter.pos)

---

---这里需要特别说明的是;iomap_begin返回的iomap范围可能会比需要的要大。

Linux讲究万物皆文件;这个文件就是inode;我们可以将其比作一个c;;类;其内部包含了;

以及其他一些辅助性质的成员。

注;需要注意file和inode的区别;file代表的是一个操作文件的上下文;其中甚至包含了文件操作的偏移;所以;当我们用read/write连续读写文件的时候;不需要传入偏移。

一个inode有两个生命周期的计数;

本小节;我们主要关注i_count;即inode cache的生命周期。

注;元数据填充需要IO;时间不定;所以;内核先创建一个I_NEW状态的inode在inode cache中占个坑;其他所有想要访问该inode的任务都需要等待I_NEW状态的解除。

ext2_iget()

---

inode = iget_locked(sb, ino);

if (!(inode->i_state & I_NEW))

return inode;

ei = EXT2_I(inode);

ei->i_block_alloc_info = NULL;

//这个步骤需要跟存储设备做IO

raw_inode = ext2_get_inode(inode->i_sb, ino, &bh);

// Fill the in-core inode from on-disk inode

unlock_new_inode(inode);

---

iget_locked()

---

spin_lock(&inode_hash_lock);

inode = find_inode_fast(sb, head, ino);

spin_unlock(&inode_hash_lock);

if (inode) {

...

//在此处等待I_NEW被清除掉

wait_on_inode(inode);

return inode;

}

inode = alloc_inode(sb);

if (inode) {

struct inode *old;

spin_lock(&inode_hash_lock);

/* We released the lock, so.. */

old = find_inode_fast(sb, head, ino);

if (!old) {

inode->i_ino = ino;

spin_lock(&inode->i_lock);

inode->i_state = I_NEW;

hlist_add_head(&inode->i_hash, head);

spin_unlock(&inode->i_lock);

inode_sb_list_add(inode);

spin_unlock(&inode_hash_lock);

/* Return the locked inode with I_NEW set, the

* caller is responsible for filling in the contents

*/

return inode;

}

...

}

---

iput_final()

---

if (op->drop_inode)

drop = op->drop_inode(inode);

else

drop = generic_drop_inode(inode);

// !inode->i_nlink || inode_unhashed(inode);

//引用计数为0之后;将inode放到lru中;等待回收

if (!drop && (sb->s_flags & SB_ACTIVE)) {

inode_add_lru(inode);

spin_unlock(&inode->i_lock);

return;

}

...

//对于已经被文件系统除名的文件;即i_nlink = 0, 不必再保留inode;直接释放掉

inode->i_state |= I_FREEING;

if (!list_empty(&inode->i_lru))

inode_lru_list_del(inode);

spin_unlock(&inode->i_lock);

evict(inode);

}

---super_cache_scan()

-> prune_icache_sb()

-> inode_lru_isolate()

-> dispose_list()Dentry;即Directory Entry-目录项;文件系统使用目录将文件组织成一个树形结构;目录项就是树的节点;它可以指向另外一个目录;即中间节点;也可以指向一个文件;即叶子节点。目录项可以由以下公式总结;

<Directory ; Name> -> Inode Number

本小节;我们分析下dentry在内存中的实现。

dentry在vfs层中;可以提供以下信息;

Hash值的生成;通过函数

full_name_hash() (exported)

hash_name() //返回的hash值和len的一起的值

link_path_walk()

---

hash_len = hash_name(nd->path.dentry, name);

---

在计算hash值的时候引入了dentry指针

__d_lookup()

---

rcu_read_lock();

hlist_bl_for_each_entry_rcu(dentry, node, b, d_hash) {

if (dentry->d_name.hash != hash)

continue;

spin_lock(&dentry->d_lock);

if (dentry->d_parent != parent)

goto next;

if (d_unhashed(dentry))

goto next;

if (!d_same_name(dentry, parent, name))

goto next;

dentry->d_lockref.count;;;

found = dentry;

spin_unlock(&dentry->d_lock);

break;

next:

spin_unlock(&dentry->d_lock);

}

rcu_read_unlock();

---

内核使用了基于链表解决冲突的hash表;我们看到其中比较了parent指针和name字符串;

dentry.d_parent/d_child/dentry.d_subdirs用来组织dentry的层级关系

相关操作在d_add()中完成;使用d_walk()可以遍历一个目录下的子目录项harklink;即指向一个inode的多个目录项;dentry通过dentry.u.d_alias挂入inode.i_dentry;

__d_find_alias(struct inode *inode)可以返回一个inode的dentry注;不支持对目录进行hardlink操作;具体原因可以参考连接filesystem - Why are hard links not allowed for directories? - Ask Ubuntuz

其中最重要的一点事;环形目录问题;如

mkdir -p /tmp/a/b

cd /tmp/a/b

ln -d /tmp/a lcd /tmp/a/b/l/b/l/b/l/b/l/b ....

这破坏了文件组织的树形结构

hardlink是一个目录项;是树的节点;而symlink是一个文件;是叶子节点;两者都可以实现跳转;但是是线上是有本质区别的。

dentry几种状态及其切换;

从d_alloc()中申请出来的dentry可以被称为;Allocated;;此时;

- 与父目录dentry的层级关系已经建立;

- __d_entry_type(dentry) == 0;即d_is_miss()或者d_is_negative()

- 并没有加入dcache的hash table;即d_unhashed()即该dentry是否被插入了dcache的hash table;主要调用方式;

- __d_add();对应的是通常对应的是lookup操作;比如

ext2_lookup() -> d_splice_alias() -> __d_add()

- __d_move();对应的是rename操作这个状态取决于__d_entry_type(dentry);如果为0;则表示此dentry为空;

d_is_negative()为TRUE

d_flags_for_inode()根据inode的类型返回不同的dentry type;

__d_set_inode_and_type()将inode和type设置给dentry;

d_is_positive()为TRUE

__d_set_inode_and_type()调用方主要有两个;

- __d_add();对应lookup操作;参考ext2_lookup();如果对应的文件

存在;则获取对应的inode;__d_add()会把inode安装到dentry里;

否则inode为空;此时被加入到hash table里的dentry就是negative的;

- __d_instantiate();针对上一步中;negative的dentry;我们通过

这个接口;给dentry安装inode;之后;inode变为positive;常见的

场景包括;link、create、mkdir注;dcache会故意创建一些negative的dentry;这样可以迅速判断一个文件不存在;而不用执行文件系统的lookup;参考 The value of negative dentries [LWN.net]

文件系统的root inode和挂载目录的dentry是怎样的关系呢?参考函数

follow_managed()

---

struct vfsmount *mounted = lookup_mnt(path);

if (mounted) {

dput(path->dentry);

if (need_mntput)

mntput(path->mnt);

path->mnt = mounted;

path->dentry = dget(mounted->mnt_root);

need_mntput = true;

continue;

}

---

struct mount *__lookup_mnt(struct vfsmount *mnt, struct dentry *dentry)

{

struct hlist_head *head = m_hash(mnt, dentry);

struct mount *p;

hlist_for_each_entry_rcu(p, head, mnt_hash)

if (&p->mnt_parent->mnt == mnt && p->mnt_mountpoint == dentry)

return p;

return NULL;

}

do_new_mount()

-> vfs_kern_mount()

-> do_add_mount()

-> graft_tree()

-> attach_recursive_mnt()

-> commit_tree()

-> __attach_mnt()

static void __attach_mnt(struct mount *mnt, struct mount *parent)

{

hlist_add_head_rcu(&mnt->mnt_hash,

m_hash(&parent->mnt, mnt->mnt_mountpoint));

list_add_tail(&mnt->mnt_child, &parent->mnt_mounts);

}从中我们可以得到如下;

文件系统的inode.lookup方法大多使用了d_splice_alias();参考ext2_lookup、ext4_lookup和xfs_vn_lookup等;其中对directory的处理;比较让人困惑;为什么会存在;inode已经有dentry;的情况?因为vfs_rename已经通过d_move对dentry进行了修改。

通过查看d_splice_alias的commit信息;发现了下面这个commit;

commit b5ae6b15bd73e35b129408755a0804287a87e041

Author: Al Viro <viro;zeniv.linux.org.uk>

Date: Sun Oct 12 22:16:02 2014 -0400merge d_materialise_unique() into d_splice_alias()

Signed-off-by: Al Viro <viro;zeniv.linux.org.uk>

当前的d_splice_alias还包括了原d_materialise_unique的一部分;通过这个commit;我们看看原d_splice_alias;

struct dentry *d_splice_alias(struct inode *inode, struct dentry *dentry)

{

- struct dentry *new = NULL;

-

if (IS_ERR(inode))

return ERR_CAST(inode);

- if (inode && S_ISDIR(inode->i_mode)) {

- spin_lock(&inode->i_lock);

- new = __d_find_any_alias(inode);

- if (new) {

- if (!IS_ROOT(new)) {

- spin_unlock(&inode->i_lock);

- dput(new);

- iput(inode);

- return ERR_PTR(-EIO);

- }

// 如果dentry位于new树下;这会造成loop

- if (d_ancestor(new, dentry)) {

- spin_unlock(&inode->i_lock);

- dput(new);

- iput(inode);

- return ERR_PTR(-EIO);

- }

- write_seqlock(&rename_lock);

- __d_move(new, dentry, false);

- write_sequnlock(&rename_lock);

- spin_unlock(&inode->i_lock);

- security_d_instantiate(new, inode);

- iput(inode);

- } else {

- /* already taking inode->i_lock, so d_add() by hand */

- __d_instantiate(dentry, inode);

- spin_unlock(&inode->i_lock);

- security_d_instantiate(dentry, inode);

- d_rehash(dentry);

- }

- } else {

- d_instantiate(dentry, inode);

- if (d_unhashed(dentry))

- d_rehash(dentry);

- }

- return new;

-}

-EXPORT_SYMBOL(d_splice_alias);

所以;现版本中d_splice_alias令人费解的部分;即__d_unalias;主要来自_materialise_unique;而后者的主要使用者是NFS client;NFS是网络共享文件系统;但是它是弱一致性;参考连接;nfs-cache-coherence.txt · GitHub

其中关于dentry cache一致性;有以下描述;

Directory entry caching

The Linux NFS client caches the result of all NFS LOOKUP requests. If the requested directory entry exists on the server, the result is referred to as a positive lookup result. If the requested directory entry does not exist on the server (that is, the server returned ENOENT), the result is referred to as negative lookup result.To detect when directory entries have been added or removed on the server, the Linux NFS client watches a directory;s mtime. If the client detects a change in a directory;s mtime, the client drops all cached LOOKUP results for that directory. Since the directory;s mtime is a cached attribute, it may take some time before a client notices it has changed. See the descriptions of the acdirmin, acdirmax, and noac mount options for more information about how long a directory;s mtime is cached.

Caching directory entries improves the performance of applications that do not share files with applications on other clients. Using cached information about directories can interfere with applications that run concurrently on multiple clients and need to detect the creation or removal of files quickly, however. The lookupcache mount option allows some tuning of directory entry caching behavior.

Before kernel release 2.6.28, the Linux NFS client tracked only positive lookup results. This permitted applications to detect new directory entries created by other clients quickly while still providing some of the performance benefits of caching. If an application depends on the previous lookup caching behavior of the Linux NFS client, you can use lookupcache=positive.

If the client ignores its cache and validates every application lookup request with the server, that client can immediately detect when a new directory entry has been either created or removed by another client. You can specify this behavior using lookupcache=none. The extra NFS requests needed if the client does not cache directory entries can exact a performance penalty. Disabling lookup caching should result in less of a performance penalty than using noac, and has no effect on how the NFS client caches the attributes of files.

结合下划线部分;参考d_splice_alias中关于__d_unalias的部分;内核并没有直接将目录下的所有的dentry全部drop掉;而是在发现存在对于同一个directory inode已经存在dentry的时候;使用__d_unalias替换掉。

IS_ROOT的分支;也来自NFS;参考函数d_obtain_alias();具体原因待研究。。。

参考连接;

Dcache scalability and RCU-walk [LWN.net]

Pathname lookup in Linux [LWN.net]

RCU-walk: faster pathname lookup in Linux [LWN.net]

path-lookup.txt [LWN.net]

Scaling dcache with RCU | Linux Journal

当我们尝试打开文件/home/will/Desktop/linux-stable时;内核实际上把它分解成;

1. lookup ;/;

2. lookup ;home; in dentry of ;/;

3. lookup ;will; in dentry of ;/home;

4. lookup ;Desktop; in dentry of ;/home/will;

5. lookup ;linux-stable; in dentry of ;/home/will/Desktop;dcache lookup只会保证每一步的lookup操作的原子性;而不会保证整个对/home/will/Desktop/linux-stable的lookup的原子性;换句话说;我们最终得到的可能是/home/kate/Desktop/linux-stable。

lookup操作分为两种ref-walk和rcu-walk两种;ref和rcu其实对应的是;如何保证在执行操作期间dentry结构不会被释放;尤其是rcu;

The cases where rcu-walk cannot continue are: * NULL dentry (ie. creat or negative lookup) * parent with ->d_op->d_hash * parent with d_inode->i_op->permission or ACLs * Following links * Dentry with ->d_op->d_revalidate

为什么seqcount_t的扩展性更好? 其中没有任何原子操作;

typedef struct seqcount {

unsigned sequence;

} seqcount_t;

static inline unsigned __read_seqcount_begin(const seqcount_t *s)

{

unsigned ret;

repeat:

ret = READ_ONCE(s->sequence);

if (unlikely(ret & 1)) {

cpu_relax();

goto repeat;

}

return ret;

}

static inline void raw_write_seqcount_begin(seqcount_t *s)

{

s->sequence;;;

smp_wmb();

}

static inline void raw_write_seqcount_end(seqcount_t *s)

{

smp_wmb();

s->sequence;;;

}Note: 内核之所以使用list处理hashtable的冲突;是因为list可以实现rcu版;rcu下的lookup操作扩展性更好。

当dcache中没有找对对应dentry;或者negative的dentry;会进入__lookup_slow;其主要分成两个步骤;

这里需要特别说明的是;因为文件系统的lookup操作是IO操作;可能速度较慢;并行的其他lookup操作;通过in_lookup_hash的hash lock的同步;会睡眠在d_wait_lookup();

Note: 一个问题是;在lookup期间;所有的任务都会进入到该路径的任务;执行d_alloc();这里是不是有点多余?是不是可以将该negative的dentry ; in_lookup插入到d_hash;这样其他lookup该dentry的任务可以直接等待

symbol link的本质是在一个文件中保存一个路径;其相当于一个文件系统指向文件的指针;

在分析symbol link的处理之前;我们需要首先看下内核是如何解析文件路径的。

;/home/will/Desktop/linux-stable;这样的路径;是完全由内核解析的;具体代码如下;

以下代码已经将部分错误分支处理去掉;以使代码变得更清晰

link_path_walk()

---

while (*name==;/;)

name;;;

if (!*name)

return 0;

for(;;) {

//对路径中 .. 和 . 的处理;类似于/home/will/Deskop/../Download

type = LAST_NORM;

if (name[0] == ;.;) switch (hashlen_len(hash_len)) {

case 2:

if (name[1] == ;.;) {

type = LAST_DOTDOT;

nd->flags |= LOOKUP_JUMPED;

}

break;

case 1:

type = LAST_DOT;

}

// hash_name函数会以;/;作为name的结尾来返回len

hash_len = hash_name(nd->path.dentry, name);

nd->last.hash_len = hash_len;

nd->last.name = name;

nd->last_type = type;

// 指向路径的下一个元素

name ;= hashlen_len(hash_len);

if (!*name)

goto OK;

// 路径中加几个;/;都没有关系

do {

name;;;

} while (unlikely(*name == ;/;));

if (unlikely(!*name)) {

} else {

//处理当前的路径元素

/* not the last component */

err = walk_component(nd, WALK_FOLLOW | WALK_MORE);

}

...

}

}简单来说;就是依次对path的每一个元素调用walk_component()。

引入symbol link之后;路径的某一个元素;会变成一个另外一个路径;甚至;symlink路径的一部分也可能是另外一个symlink;所以;这里引入了一个stack的概念;例如;

/home/will/Desktop/sourcecode/linux-stable

|

;--> /mnt/disk1/AAAA/sourcecode

|

;--> /mnt/disk2/BBBB

路径的解析过程为

stack[0] home -> will -> Dektop -> sourcecode ;-> linux-stable

____________/__________ |

/ |

stack[1] mnt -> disk1 -> AAAA |

____________/__________ |

/ |

mnt -> disk2 -> BBBB -;

与symlink stack有关的代码为;

扩展stack;

push stack;

walk_component() -> step_into() -> pick_link() -> nd_alloc_stack()

link_path_walk()

---

if (err) {

// 此处调用的是文件系统的get_link方法

const char *s = get_link(nd);

...

if (unlikely(!s)) {

} else {

nd->stack[nd->depth - 1].name = name;

name = s;

continue;

}

}

---

pop stack:

link_path_walk()

---

if (unlikely(!*name)) {

OK:

/* pathname body, done */

if (!nd->depth)

return 0;

// Stack is popped here

name = nd->stack[nd->depth - 1].name;

/* trailing symlink, done */

if (!name)

return 0;

/* last component of nested symlink */

err = walk_component(nd, WALK_FOLLOW);

} else {

/* not the last component */

err = walk_component(nd, WALK_FOLLOW | WALK_MORE);

}

---

NO WALK_MORE

walk_component() -> step_into() -> put_link() -> nd->depth--

关于mount有两个关键点需要掌握;

另外;有两个看起来比较相似的结构体;mount和vfsmount;具体来源可以参考;

vfs: start hiding vfsmount guts series

Almost all fields of struct vfsmount are used only by core VFS (and

a fairly small part of it, at that). The plan: embed struct vfsmount

into struct mount, making the latter visible only to core parts of VFS.

Then move fields from vfsmount to mount, eventually leaving only

mnt_root/mnt_sb/mnt_flags in struct vfsmount. Filesystem code still

gets pointers to struct vfsmount and remains unchanged; all such

pointers go to struct vfsmount embedded into the instances of struct

mount allocated by fs/namespace.c. When fs/namespace.c et.al. get

a pointer to vfsmount, they turn it into pointer to mount (using

container_of) and work with that.

This is the first part of series; struct mount is introduced,

allocation switched to using it.

Signed-off-by: Al Viro <viro;zeniv.linux.org.uk>

总之;vfsmount和mount结构可以看成是一个。

实现目录树嫁接的关键是__lookup_mnt()函数;如下;

struct mount *__lookup_mnt(struct vfsmount *mnt, struct dentry *dentry)

{

struct hlist_head *head = m_hash(mnt, dentry);

struct mount *p;

hlist_for_each_entry_rcu(p, head, mnt_hash)

if (&p->mnt_parent->mnt == mnt && p->mnt_mountpoint == dentry)

return p;

return NULL;

}

函数通过vfsmount和dentry指针;找到匹配的mount结构;

path_init()

---

if (*s == ;/;) {

....

// nd->root = current->fs->root

set_root(nd);

// nd->path = nd->root

if (likely(!nd_jump_root(nd)))

return s;

...

} else if (nd->dfd == AT_FDCWD) {

if (flags & LOOKUP_RCU) {

...

} else {

//nd->path = current->fs->pwd

get_fs_pwd(current->fs, &nd->path);

nd->inode = nd->path.dentry->d_inode;

}

return s;

}

---

mount;这是要寻找的挂载点的mount结构;其mnt_parent指向挂载点目录的mount;参考代码;

do_add_mount()

---

parent = real_mount(path->mnt);

err = graft_tree(newmnt, parent, mp);

-> attach_recursive_mnt()

-> mnt_set_mountpoint()

---当从一棵目录树通过挂载点进入另外一棵目录树时;dentry和vfsmount都要切换;

walk_component()

-> follow_managed()

---

if (managed & DCACHE_MOUNTED) {

struct vfsmount *mounted = lookup_mnt(path);

if (mounted) {

dput(path->dentry);

if (need_mntput)

mntput(path->mnt);

path->mnt = mounted;

path->dentry = dget(mounted->mnt_root);

need_mntput = true;

continue;

}

---

-> step_into()

-> path_to_nameidata()

---

nd->path.mnt = path->mnt;

nd->path.dentry = path->dentry;

---

或者叫bind mount;其操作如下;

mkdir /tmp/foo

touch /tmp/foo/will

mkdir /tmp/barmount --bind /tmp/foo /tmp/bar

之后;我们就可以通过/tmp/bar访问/tmp/foo了;但是;使用symlink也能实现类似的功能;如下

mkdir /tmp/foo

touch /tmp/foo/willln -s /tmp/foo /tmp/bar

两者有什么区别呢?我们可以从两者的原理上分析;

Bind mounts can be thought of as a sort of symbolic link at the filesystem level. Using mount --bind, it is possible to create a second mount point for an existing filesystem, making that filesystem visible at a different spot in the namespace. Bind mounts are thus useful for creating specific views of the filesystem namespace; one can, for example, create a bind mount which makes a piece of a filesystem visible within an environment which is otherwise closed off with chroot().

我们先看下chroot是如何实现的;

修改task_struct.fs.root

ksys_chroot()

---

error = user_path_at(AT_FDCWD, filename, lookup_flags, &path);

set_fs_root(current->fs, &path);

---

mntget(path->mnt);

dget(path->dentry);

spin_lock(&fs->lock);

write_seqcount_begin(&fs->seq);

old_root = fs->root;

fs->root = *path;

write_seqcount_end(&fs->seq);

spin_unlock(&fs->lock);

if (old_root.dentry) {

dput(path->dentry);

mntput(path->mnt);

}

---

---

这个结构会在fork的时候;被子任务继承

copy_process()

-> copy_fs()

-> copy_fs_struct()

---

spin_lock(&old->lock);

fs->root = old->root;

path_get(&fs->root);

fs->pwd = old->pwd;

path_get(&fs->pwd);

spin_unlock(&old->lock);

---

结合解析路径时;如果symlink是绝对路径;在chroot之后;解析出的inode会改变;而bind mount;如果chroot的路径依然原文件系统下;那么vfsmount不会变化;在dentry没有改变的情况下;得到的结果是不变的。

那么bind mount如何实现呢?

do_loopback()

---

// bind mount directory

old = real_mount(old_path.mnt);

// mount point

parent = real_mount(path->mnt);

mnt = clone_mnt(old, old_path.dentry, 0);

---

mnt = alloc_vfsmnt(old->mnt_devname);

...

//增加bind mount directory所在文件系统的sb的引用计数

atomic_inc(&sb->s_active);

mnt->mnt.mnt_sb = sb;

// bind mount的root就是bind mount directory

mnt->mnt.mnt_root = dget(root);

//这个会在set_mount_point的时候再次更新

mnt->mnt_mountpoint = mnt->mnt.mnt_root;

mnt->mnt_parent = mnt;

lock_mount_hash();

list_add_tail(&mnt->mnt_instance, &sb->s_mounts);

unlock_mount_hash();

---

err = graft_tree(mnt, parent, mp);

-> attach_recursive_mnt()

-> mnt_set_mountpoint(dest_mnt, dest_mp, source_mnt);

bind mount实现read only;基于的是mount结构上的RDONLY flag

mount -t bind /home/will/Desktop/sourcecode /mnt/readonly

mount -o remount,ro /mnt/readonly

filename_create()

do_renameat2()

do_unlinkat()

do_last()

....

mnt_want_write()

-> __mnt_want_write()

---

preempt_disable();

mnt_inc_writers(mnt);

...

smp_rmb();

if (mnt_is_readonly(m)) {

mnt_dec_writers(mnt);

ret = -EROFS;

}

preempt_enable();

return ret;

---

本小节我们主要看Page Cache在系统中如何组织和使用;至于其回收和Writeback;可以参考;

Linux 内存管理_jianchwa的博客-CSDN博客_内存管理器

page cache使用radix tree维护;本小节;我们看下radix tree如何实现;并讨论下;使用radix tree的原因。

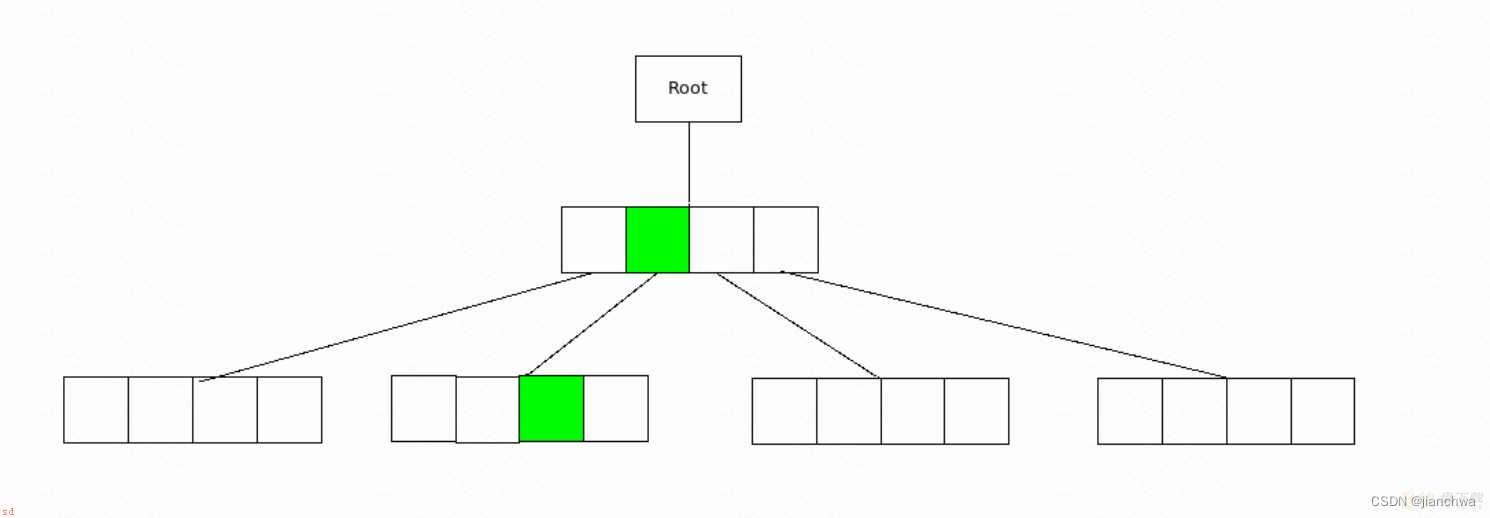

radix tree;本质上是一个数组;容量与每个节点的slot的个数和高度有关;即N^H;如下;

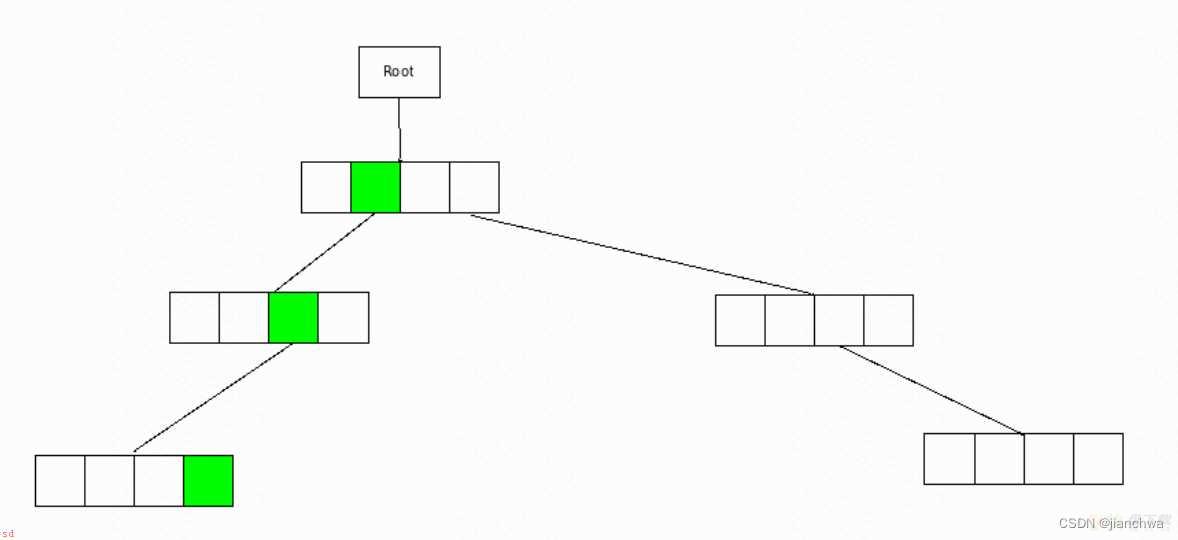

与普通数组不同的是;radix tree支持空洞;如下;

所以;他比一般的数组节省内存;同时;也保留了数组查询块的特点;radix tree不是O1;而是OH;而H = logN(S);其中S是总容量;N越大;H越小。

下面;我们看下Linux内核中;radix tree是如何实现的;

struct radix_tree_node {

unsigned int count;

...

void __rcu *slots[RADIX_TREE_MAP_SIZE];

unsigned long tags[RADIX_TREE_MAX_TAGS][RADIX_TREE_TAG_LONGS];

};

#define RADIX_TREE_MAP_SHIFT (6)

#define RADIX_TREE_MAP_SIZE (1UL << RADIX_TREE_MAP_SHIFT)

#define RADIX_TREE_MAX_TAGS 3

#define RADIX_TREE_TAG_LONGS

((RADIX_TREE_MAP_SIZE ; BITS_PER_LONG - 1) / BITS_PER_LONG)

- 每个node的容量为32,

- count;保存这个node中已经安装的slot的数量;

- tags;每个slot还有3bits的tag;用来保存一些属性信息;比如page cache的三个属性是;

/*

* Radix-tree tags, for tagging dirty and writeback pages within the pagecache

* radix trees

*/

#define PAGECACHE_TAG_DIRTY 0

#define PAGECACHE_TAG_WRITEBACK 1

#define PAGECACHE_TAG_TOWRITE 2

extend的过程大致如下;

Root Root

/ - /

Node0 Node1 -/ Node2

/

Node0 Node1

static int radix_tree_extend(struct radix_tree_root *root, unsigned long index)

{

...

/* Figure out what the height should be. */

height = root->height ; 1;

while (index > radix_tree_maxindex(height))

height;;;

...

do {

if (!(node = radix_tree_node_alloc(root)))

return -ENOMEM;

...

/* Increase the height. */

newheight = root->height;1;

node->count = 1;

node->parent = NULL;

slot = root->rnode;

if (newheight > 1) {

slot = indirect_to_ptr(slot);

slot->parent = node;

}

//将原来的root node安装到新的root node的slot[0]

node->slots[0] = slot;

node = ptr_to_indirect(node);

rcu_assign_pointer(root->rnode, node);

root->height = newheight;

} while (height > root->height);

out:

return 0;

}

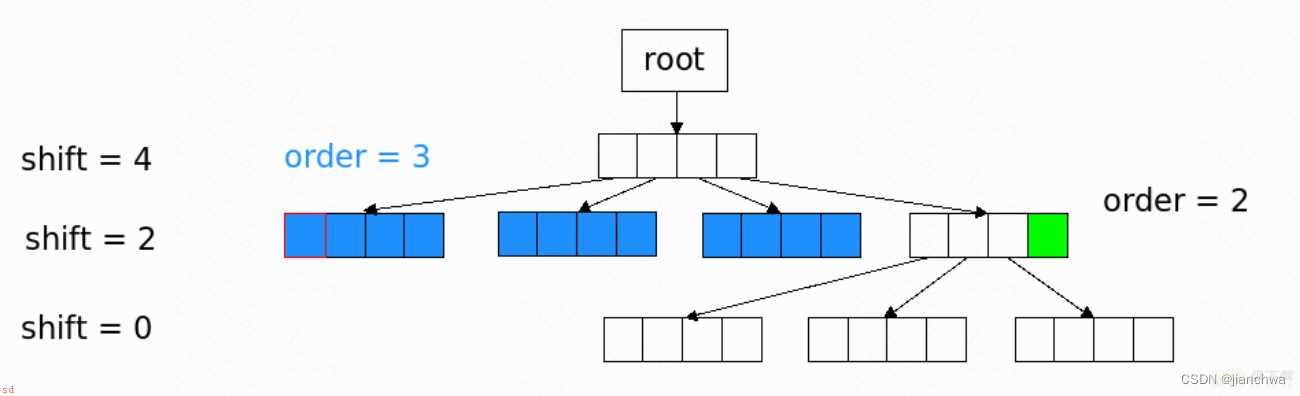

4系内核中;radix tree引入了order的概念;参考下图;

这个版本的radix tree;使用shift替换了height的概念;order代表的是;按2^order对齐的范围;如上图所示的order = 3和order = 2;在order范围内;slot都指向其第一个成员(蓝色红框);同时;子成员都被释放;参考代码:

__radix_tree_create()

---

//遍历的停止条件;比较order

while (shift > order) {

shift -= RADIX_TREE_MAP_SHIFT;

if (child == NULL) {

/* Have to add a child node. */

child = radix_tree_node_alloc(gfp, node, root, shift,

offset, 0, 0);

rcu_assign_pointer(*slot, node_to_entry(child));

if (node)

node->count;;;

} else if (!radix_tree_is_internal_node(child))

break;

/* Go a level down */

node = entry_to_node(child);

offset = radix_tree_descend(node, &child, index);

slot = &node->slots[offset];

}

---

insert_entries()

---

if (node) {

if (order > node->shift)

n = 1 << (order - node->shift);

else

n = 1;

offset = get_slot_offset(node, slot);

} else {

n = 1;

offset = 0;

}

if (n > 1) {

offset = offset & ~(n - 1);

slot = &node->slots[offset];

}

sibling = xa_mk_sibling(offset);

...

for (i = 0; i < n; i;;) {

struct radix_tree_node *old = rcu_dereference_raw(slot[i]);

// 第一个成员保存item;其他保存sibling指针

if (i) {

rcu_assign_pointer(slot[i], sibling);

} else {

rcu_assign_pointer(slot[i], item);

}

//释放掉所有子成员

if (xa_is_node(old))

radix_tree_free_nodes(old);

if (xa_is_value(old))

node->nr_values--;

}

---

page cache在radix tree中存在三个tag;即

标记脏页

__set_page_dirty()

---

xa_lock_irqsave(&mapping->i_pages, flags);

if (page->mapping) { /* Race with truncate? */

account_page_dirtied(page, mapping);

radix_tree_tag_set(&mapping->i_pages,

page_index(page), PAGECACHE_TAG_DIRTY);

}

xa_unlock_irqrestore(&mapping->i_pages, flags);

---

在writeback时;使用该标记将脏页写出

write_cache_pages()

---

if (wbc->sync_mode == WB_SYNC_ALL || wbc->tagged_writepages)

tag = PAGECACHE_TAG_TOWRITE;

else

tag = PAGECACHE_TAG_DIRTY;

if (wbc->sync_mode == WB_SYNC_ALL || wbc->tagged_writepages)

tag_pages_for_writeback(mapping, index, end);

done_index = index;

while (!done && (index <= end)) {

int i;

nr_pages = pagevec_lookup_range_tag(&pvec, mapping, &index, end,

tag);

if (nr_pages == 0)

break;

for (i = 0; i < nr_pages; i;;) {

struct page *page = pvec.pages[i];

done_index = page->index;

lock_page(page);

if (!clear_page_dirty_for_io(page))

goto continue_unlock;

ret = (*writepage)(page, wbc, data);

if (--wbc->nr_to_write <= 0 &&

wbc->sync_mode == WB_SYNC_NONE) {

done = 1;

break;

}

}

pagevec_release(&pvec);

cond_resched();

}

---

dirty转为writeback

__test_set_page_writeback()

---

if (mapping && mapping_use_writeback_tags(mapping)) {

...

xa_lock_irqsave(&mapping->i_pages, flags);

ret = TestSetPageWriteback(page);

if (!ret) {

radix_tree_tag_set(&mapping->i_pages, page_index(page),

PAGECACHE_TAG_WRITEBACK);

...

}

if (!PageDirty(page))

radix_tree_tag_clear(&mapping->i_pages, page_index(page),

PAGECACHE_TAG_DIRTY);

if (!keep_write)

radix_tree_tag_clear(&mapping->i_pages, page_index(page),

PAGECACHE_TAG_TOWRITE);

xa_unlock_irqrestore(&mapping->i_pages, flags);

}

---

__test_set_page_writeback()

test_clear_page_writeback()

等待处于wrieteback状态;即写IO发出但未完成

__filemap_fdatawrite_range()

---

pagevec_init(&pvec);

while (index <= end) {

unsigned i;

nr_pages = pagevec_lookup_range_tag(&pvec, mapping, &index,

end, PAGECACHE_TAG_WRITEBACK);

if (!nr_pages)

break;

for (i = 0; i < nr_pages; i;;) {

struct page *page = pvec.pages[i];

wait_on_page_writeback(page);

ClearPageError(page);

}

pagevec_release(&pvec);

cond_resched();

}

---

This function scans the page range from ;start to ;end (inclusive) and tags

all pages that have DIRTY tag set with a special TOWRITE tag. The idea is

that write_cache_pages (or whoever calls this function) will then use

TOWRITE tag to identify pages eligible for writeback. This mechanism is

used to avoid livelocking of writeback by a process steadily creating new

dirty pages in the file (thus it is important for this function to be quick

so that it can tag pages faster than a dirtying process can create them).

tag_pages_for_writeback()

---

xa_lock_irq(&mapping->i_pages);

radix_tree_for_each_tagged(slot, &mapping->i_pages, &iter, start,

PAGECACHE_TAG_DIRTY) {

if (iter.index > end)

break;

radix_tree_iter_tag_set(&mapping->i_pages, &iter,

PAGECACHE_TAG_TOWRITE);

tagged;;;

if ((tagged % WRITEBACK_TAG_BATCH) != 0)

continue;

...

}

xa_unlock_irq(&mapping->i_pages);

}

对page cache的访问有两方式;

通过mmap访问可以节省系统调用相关代码的开销;并减少一次内存拷贝;是最高效的访问方式。

mmap主要涉及两个点;

generic_file_map() 设置vma->ops为generic_file_vm_ops

vma_set_anonymous() 设置vma->ops为NULL

handle_pte_fault()

---

if (!vmf->pte) {

if (vma_is_anonymous(vmf->vma)) // check vma->ops

return do_anonymous_page(vmf);

else

return do_fault(vmf);

}

---__do_fault() -> filemap_fault()

__do_fault()负责准备好page;并且为读操作准备好page;其中使用了filemap_read_page()

do_page_mkwrite()

则负责为写操作准备好page;

比如

filemap_page_mkwrite()

---

file_update_time(vmf->vma->vm_file);

set_page_dirty(page);

---

比如

ext4_page_mkwrite()

---

/* Delalloc case is easy... */

if (test_opt(inode->i_sb, DELALLOC) &&

!ext4_nonda_switch(inode->i_sb)) {

do {

err = block_page_mkwrite(vma, vmf,

ext4_da_get_block_prep);

} while (err == -ENOSPC &&

ext4_should_retry_alloc(inode->i_sb, &retries));

goto out_ret;

}

---

如果是非delay allocation模式;还会在这里分配空间

finish_fault() -> do_set_pte() 设置页表

do_fault_around() -> filemap_map_pages()

这是一个优化;do_fault_around()尝试将发生fault周围的page都map起来

fault_dirty_shared_page() -> balance_dirty_pages_ratelimited()

这点跟正常的write系统调用一样另外;我们考虑下;系统如何感知到mmap的page上的写操作?答案是;writeprotect;我们看下函数;

handle_pte_fault()

---

// vmf->pte is not NULL and present

if (vmf->flags & FAULT_FLAG_WRITE) {

if (!pte_write(entry))

return do_wp_page(vmf);

entry = pte_mkdirty(entry);

}

---

do_wp_page()会调用到page_mkwrite;其中会调用set_page_dirty()和

file_update_time()等;

而write_cache_pages()则会调用

clear_page_dirty_for_io() -> page_mkclean()清除相关标记位buffer read IO且cache miss时;IO分为两步;

Direct IO是指;直接通过用户Buffer组装成bio提交给block层并直到设备;由此;我们可以获得以下;

direct IO的实现分为两个关键部分;

generic_file_read_iter()

-> mapping->a_ops->direct_IO()

ext2_direct_IO()

-> blockdev_direct_IO(iocb, inode, iter, ext2_get_block);

ext4_file_read_iter()

-> ext4_dio_read_iter()

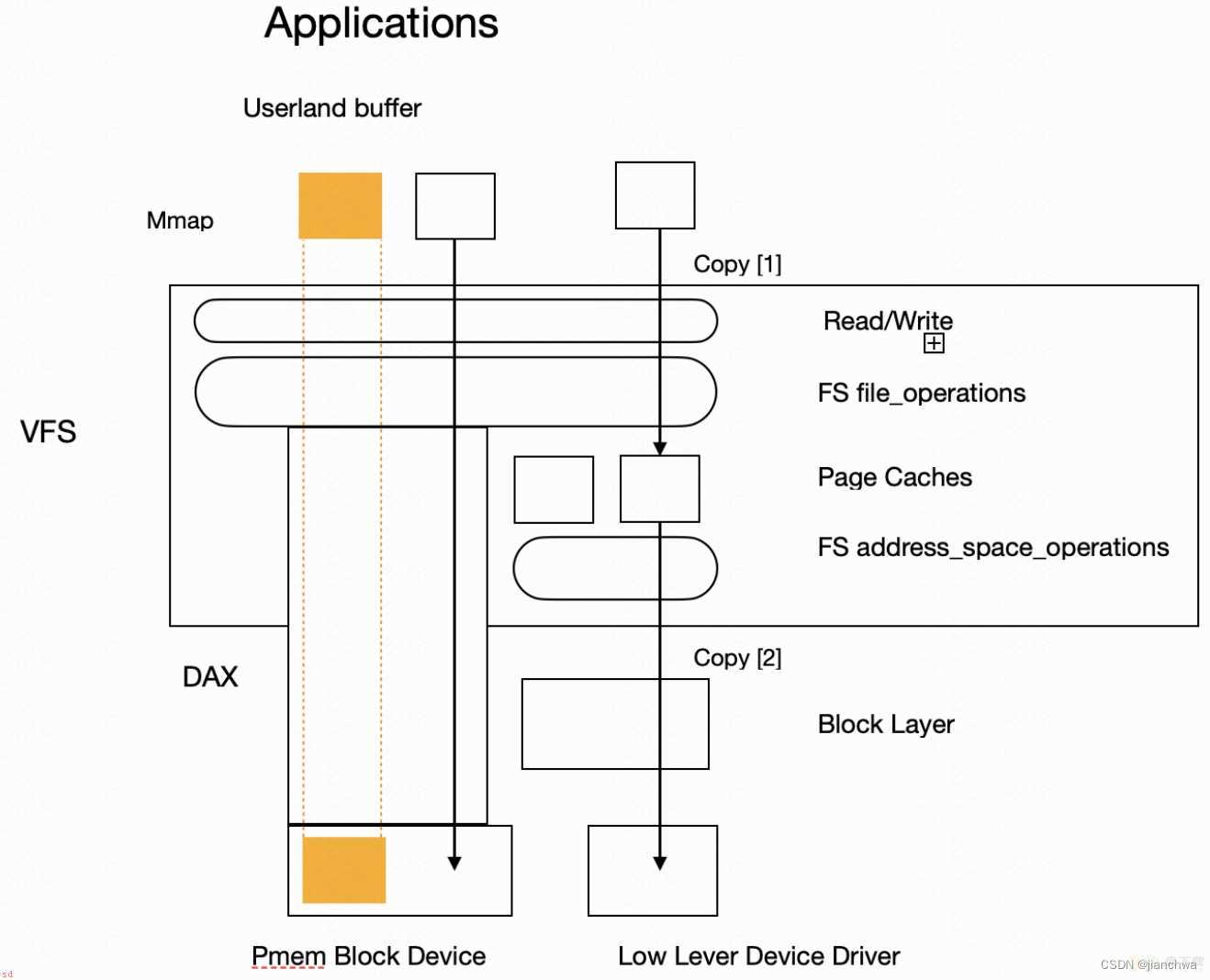

-> iomap_dio_rw(iocb, to, &ext4_iomap_ops, NULL, 0, 0);DAX;direct access extensions, 其官方定义为;

File system extensions to bypass the page cache and block layer to mmap persistent memory, from a PMEM block device, directly into a process address space

DAX的引入有两几方面的原因;

所以;DAX在整个Linux内核存储栈上开了一个旁路;bypass page cache和block layer;如下;

Pmem设备是用来支持DAX模式的块设备;嗯?既然bypass了block层;为什么还需要块设备?原因在于;DAX要兼容原来的文件系统;比如Ext2、Ext4、XFS等;而这些文件系统的元数据依然是基于块设备;所以nvdimm设备依然需要块设备访问接口;参考如下代码;

pmem_submit_bio()

---

bio_for_each_segment(bvec, bio, iter) {

if (op_is_write(bio_op(bio)))

rc = pmem_do_write(pmem, bvec.bv_page, bvec.bv_offset,

iter.bi_sector, bvec.bv_len);

else

rc = pmem_do_read(pmem, bvec.bv_page, bvec.bv_offset,

iter.bi_sector, bvec.bv_len);

if (rc) {

bio->bi_status = rc;

break;

}

}

bio_endio(bio);

---

pmem_submit_bio()

-> pmem_do_write()

//数据的写入地址;从sector转换而来

-> phys_addr_t pmem_off = sector * 512 ; pmem->data_offset;

-> write_pmem();

-> memcpy_flushcache()

-> __memcpy_flushcache()

//写入size一定是512对齐的

---

/* 4x8 movnti loop */

while (size >= 32) {

asm(;movq (%0), %%r8

;

;movq 8(%0), %%r9

;

;movq 16(%0), %%r10

;

;movq 24(%0), %%r11

;

;movnti %%r8, (%1)

;

;movnti %%r9, 8(%1)

;

;movnti %%r10, 16(%1)

;

;movnti %%r11, 24(%1)

;

:: ;r; (source), ;r; (dest)

: ;memory;, ;r8;, ;r9;, ;r10;, ;r11;);

dest ;= 32;

source ;= 32;

size -= 32;

}

---上图中;最终的拷贝操作使用到了ntstore操作;该指令可以保证指令返回时;数据落入非易失性存储介质。但是;从实现上;我们可以看到;pmem并不支持sector级更新的原子性。

这对文件系统metadata更新正确性是否会造成影响?

fs/dax.c内提供了文件系统实现dax的几个通用功能;

read/write dax访问 ;也就是调用read/write系列接口时;dax_iomap_rw()通过文件系统提供的iomap_begin/end回调;获取文件的映射;然后直接访问pmem;这个过程中bypass了page cache和block层;另外;对于写操作;参考上一小节;其使用的ntstore;可以保证数据落入掉电保护域。

dax_iomap_rw()

---

while ((ret = iomap_iter(&iomi, ops)) > 0)

---

(1) iomap_end

(2) advance iter by iter.processed

(3) iomap_begin, based on iter.inode, iter.pos, iter.len, get iomap.addr, iomap.offset, iomap.length

---

iomi.processed = dax_iomap_iter(&iomi, iter);

---

read/write on iomap.addr ; (iomap.offset - iter.pos)

---

---

dax_iomap_iter()

---

/*

iter->pos iter->len

| |

|--------------------------------|

mmapped chunk in file

iomap.offset iomap.length

*/

length = min(iter->len, iter->iomap.offset ; iter->iomap.length - iter->pos);

pos = iter->pos;

end = pos ; length;

while (pos < end) {

// Copy one page per round

unsigned offset = pos & (PAGE_SIZE - 1);

const size_t size = ALIGN(length ; offset, PAGE_SIZE);

const sector_t sector = (iomap->addr ; (pos & PAGE_MASK) - iomap->offset) >> 9;

// Get the address of pmem for direct access

ret = bdev_dax_pgoff(bdev, sector, size, &pgoff);

map_len = dax_direct_access(dax_dev, pgoff, PHYS_PFN(size),

&kaddr, NULL);

...

if (iov_iter_rw(iter) == WRITE)

xfer = dax_copy_from_iter(dax_dev, pgoff, kaddr,

map_len, iter);

else

xfer = dax_copy_to_iter(dax_dev, pgoff, kaddr,

map_len, iter);

pos ;= xfer;

length -= xfer;

...

}

---

剩下的大都是关于page fault相关;其中比较特殊的是;本来用来存储page cache address_space.i_pages中存储了一种特殊的entry;其包含以下信息;

truncate_inode_pages_range()

-> truncate_exceptional_pvec_entries()

-> dax_delete_mapping_entry()

-> __dax_invalidate_entry()

-> dax_disassociate_entry()

---

for_each_mapped_pfn(entry, pfn) {

struct page *page = pfn_to_page(pfn);

page->mapping = NULL;

page->index = 0;

}

---

此处获取pfn信息;虽然也可以通过iomap;但是此过程需要访问元数据;效率不如直接保存在

address_space.i_pages里效率高

page->mapping和page->index代表的是该page所在的文件及在文件中的偏移LOCKED;用于将一定范围锁起来;以保证整个操作的原子性;尤其是在insert pte时可能会申请内存;并且sleep;在正常的page fault中;这个操作是通过lock_page实现的;这里之所以没有使用这个标记;可能是因为在pmem的page结构体可能是保存在nvdimm上的(参考nvdimm create-namespace --map参数)

PMD or PTE;如果PMD bit设置了;就代表这个entry是pmd的;

ZERO;对应read fault on unmapped page;此时会给其分配一个zero page;zero page可能是pmd的;在有pte fault(没能分配到pmd大小的page导致pmd fault fallback to pte fault)发生的时候;是可以降级拆分的;

EMPTY;与LOCKED配合用来占坑的

之前提到read/write dax路径访问pmem的时候;写操作直接通过ntstore;可以保证数据直接落入掉电保护域。mmap的文件将pmem直接暴露给用户;并不能强制用户使用持久化编程技术;为了保证fsync的语义;dax虽然bypass page cache;但是依然使用了writeback子系统;并借此实现数据的flsuh;如下;

dax_fault_iter()

-> dax_insert_entry()

---

if (dirty)

__mark_inode_dirty(mapping->host, I_DIRTY_PAGES);

xas_reset(xas);

xas_lock_irq(xas);

if (dax_is_zero_entry(entry) || dax_is_empty_entry(entry)) {

...

} else {

xas_load(xas); /* Walk the xa_state */

}

if (dirty)

xas_set_mark(xas, PAGECACHE_TAG_DIRTY);

xas_unlock_irq(xas);

---

ext2_dax_writepages/ext4_dax_writepages/xfs_dax_writepages()

-> dax_writeback_mapping_range()

---

xas_lock_irq(&xas);

xas_for_each_marked(&xas, entry, end_index, PAGECACHE_TAG_TOWRITE) {

ret = dax_writeback_one(&xas, dax_dev, mapping, entry);

...

}

xas_unlock_irq(&xas);

---

dax_writeback_one()

---

/* Lock the entry to serialize with page faults */

dax_lock_entry(xas, entry);

pfn = dax_to_pfn(entry);

count = 1UL << dax_entry_order(entry);

index = xas->xa_index & ~(count - 1);

// Make the page become writeprotected

dax_entry_mkclean(mapping, index, pfn);

dax_flush(dax_dev, page_address(pfn_to_page(pfn)), count * PAGE_SIZE);

/*

* After we have flushed the cache, we can clear the dirty tag. There

* cannot be new dirty data in the pfn after the flush has completed as

* the pfn mappings are writeprotected and fault waits for mapping

* entry lock.

*/

xas_reset(xas);

xas_lock_irq(xas);

xas_store(xas, entry);

xas_clear_mark(xas, PAGECACHE_TAG_DIRTY);

---

这里使用mark_inode_dirty()将inode交给writeback子系统;可以保证sync语义;参考代码;

sync_filesystem()

---

writeback_inodes_sb(sb, WB_REASON_SYNC);

if (sb->s_op->sync_fs) {

ret = sb->s_op->sync_fs(sb, 0);

if (ret)

return ret;

}

...

---目前我们常见的文件系统;如本地文件系统ext2、ext4、xfs;网络文件系统nfs、ocfs2、cephfs等;都包含以下元素;

我们可以通过任何方式构造文件系统;只要最终效果包含以上元素即可。

为了构建起以上文件系统的基本元素;还需要一些额外数据;即元数据;包括;

文件系统的目的;是将一段线性的存储空间;组织成一种符合人脑习惯的形式。

非人脑习惯;可以参考对象存储;它只有一个object ID;我们可以很容易的记住;/home/will/Desktop/sourcecode/linux-stable;但是你能记住这样一段ID吗?

489f6d8b8553722f4c6f34b256aac5cacbee52eb

本小节分别看下block层几个核心指标的含义和数据来源

根据iostat man page的解释;

iostat(1) - Linux manual page

Percentage of elapsed time during which I/O requests were issued to the device (bandwidth utilization for the device). Device saturation occurs when this value is close to 100% for devices serving requests serially. But for devices serving requests in parallel, such as RAID arrays and modern SSDs, this number does not reflect their performance limits

其中对于设备利用率提到了两种解释;

接下来;我们分别做几个简单试验;内核版本为Centos 4.18:

HDD ST4000NM0035-1V4测试条件 BW Util%rw=randread bs=4k iodepth=32 0.83 MB/s 20%rw=read bs=4k iodepth=32 202 MB/s 100%rw=read bs=1M iodepth=1 203 MB/s 41% NVMe SSD HUSMR7632BHP301测试条件 BW Util%rw=randread bs=4k iodepth=32 1454 MB/s 100 %rw=randread bs=4k iodepth=32 numjobs=16 5448 MB/s 100 %fio --bs=4k --iodepth=32 --rw=randread --direct=1 --ioengine=libaio --numjobs=1 --runtime=60 --name=test --filename=/dev/sdb

对于两个测试结果;带宽利用率这个解释无疑是不成立的;下面;我们主要从IO处理能力利用率的角度分析;Util%结果的意义。

对于HDD机械盘来说;其处理IO的能力与IO Pattern强相关;参考随机小IO这个结果;

rw=randread bs=4k iodepth=32 0.83 MB/s 20%利用率只有20%是不是说还有80%的能力未被利用?然而;无论我们如何加压;只要是随机IO;带宽和利用率始终无法上去;也就是说此时设备对随机IO的处理能力;或者准确的说;是磁头的处理能力;已经达到上限;即100%我们再参考下面顺序IO的结果;

rw=read bs=4k iodepth=32 202 MB/s 100%rw=read bs=1M iodepth=1 203 MB/s 41%bs = 1M时;利用率只有40%是说磁盘还有60%的使用空间?此时203MB/s其实已经达到带宽上限;如果我们再加压;带宽基本不会再有上升;当然;利用率确实有可能上升到100%。那么;在这个测试中;利用率有什么意义呢?

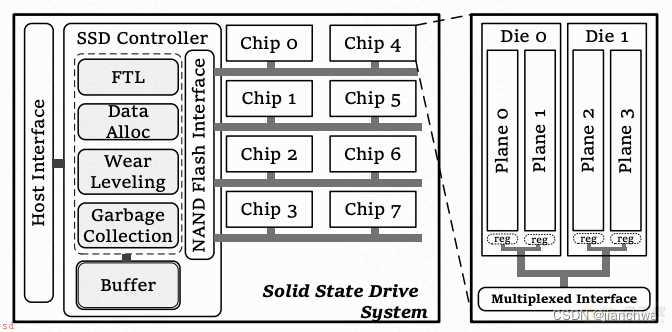

再看NVMe SDD的情况;结果更加明显;同样都是利用率100%带宽差了几倍 ;造成这种差异的原因是;SSD内部的并发能力;详情可以参考论文;这里引用里面的一张图;Parallel all the time: Plane Level Parallelism Exploration for High Performance SSDshttps://storageconference.us/2019/Research/ParallelAllTheTime.pdf

综上;从数据表现来看;util%这个指标对于表征存储设备的使用情况;参考意义不大。

那么util%到底是什么?接下来;我们对照代码看下。

参考iostat代码中util的计算方法;iostat源码Performance monitoring tools for Linux. Contribute to sysstat/sysstat development by creating an account on GitHub. https://github.com/sysstat/sysstat/blob/v12.0.0/iostat.c

https://github.com/sysstat/sysstat/blob/v12.0.0/iostat.c

write_ext_stat()

-> compute_ext_disk_stats()

---

xds->util = S_VALUE(sdp->tot_ticks, sdc->tot_ticks, itv);

---

read_sysfs_dlist_part_stat()

-> read_sysfs_file_stat()

---

i = fscanf(fp, %lu %lu %lu %lu %lu %lu %lu %u %u %u %u;,

&rd_ios, &rd_merges_or_rd_sec, &rd_sec_or_wr_ios, &rd_ticks_or_wr_sec,

&wr_ios, &wr_merges, &wr_sec, &wr_ticks, &ios_pgr, &tot_ticks, &rq_ticks);

---

filename = /sys/block/xxx/stat

tot_ticks的值来自/sys/block/xxx/stat;参考内核代码diskstat_show;tot_ticks就是disk_stats.io_ticks;该统计数据的来源为;

void update_io_ticks(struct hd_struct *part, unsigned long now)

{

unsigned long stamp;

again:

stamp = READ_ONCE(part->stamp);

if (unlikely(stamp != now)) {

if (likely(cmpxchg(&part->stamp, stamp, now) == stamp)) {

__part_stat_add(part, io_ticks, 1);

}

}

if (part->partno) {

part = &part_to_disk(part)->part0;

goto again;

}

}

blk_mq_bio_to_request()

-> blk_account_io_start()

-> update_io_ticks()

__blk_mq_end_request()

-> blk_account_io_done()

-> update_io_ticks(io_ticks的统计;取决于io start和io done两个事件的间隔;当IO延迟高于1 jiffy(HZ = 1000, 1 jiffy = 1ms)时;统计的io_ticks是少于应该统计的值的;对比测试结果;

条件 bw rwait util%rw=read bs=1M iodepth=1 203.00 MB/s 4.05 ms 41.10rw=read bs=4M iodepth=1 206.69 MB/s 11.98 ms 32.30bs = 4M时;其带宽稍高;利用率反到降低了。

其实这个算法并不是一直如此;而是经过一次修改;参考如下commit;

commit 5b18b5a737600fd20ba2045f320d5926ebbf341a

Author: Mikulas Patocka <mpatocka;redhat.com>

Date: Thu Dec 6 11:41:19 2018 -0500

block: delete part_round_stats and switch to less precise counting

We want to convert to per-cpu in_flight counters.

The function part_round_stats needs the in_flight counter every jiffy, it

would be too costly to sum all the percpu variables every jiffy, so it

must be deleted. part_round_stats is used to calculate two counters -

time_in_queue and io_ticks.

time_in_queue can be calculated without part_round_stats, by adding the

duration of the I/O when the I/O ends (the value is almost as exact as the

previously calculated value, except that time for in-progress I/Os is not

counted).

io_ticks can be approximated by increasing the value when I/O is started

or ended and the jiffies value has changed. If the I/Os take less than a

jiffy, the value is as exact as the previously calculated value. If the

I/Os take more than a jiffy, io_ticks can drift behind the previously

calculated value.

Signed-off-by: Mikulas Patocka <mpatocka;redhat.com>

Signed-off-by: Mike Snitzer <snitzer;redhat.com>

Signed-off-by: Jens Axboe <axboe;kernel.dk>

在这个commit之前;io_tick的算法为;

static void part_round_stats_single(struct request_queue *q,

struct hd_struct *part, unsigned long now,

unsigned int inflight)

{

if (inflight) {

__part_stat_add(part, time_in_queue,

inflight * (now - part->stamp));

__part_stat_add(part, io_ticks, (now - part->stamp));

}

part->stamp = now;

}在这种算法下;只要存储设备上有inflight的IO;时间就会被计入io_ticks;

甚至;在diskstat_show()中;都会调用一次该函数;所以;如果有IO hung;util%会显示100%。

此时;util%的意义更接近于;在过去的时间内;设备被使用的时间的在占比。

综上;util%不可以作为设备能力的利用率来参考。